
InfoWorld: Technology insight for the enterprise

Claude AI finds 500 high-severity software vulnerabilities 6 Feb 2026, 4:28 pm

Anthropic only released its latest large language model, Claude Opus 4.6, on Thursday, but it has already been using it behind the scenes to identify zero-day vulnerabilities in open-source software.

In the trial, Anthropic put Claude inside a virtual machine with access to the latest versions of open-source projects and provided it with a range of standard utilities and vulnerability analysis tools, but gave it no instructions on how to use them or how to identify vulnerabilities.

Despite this lack of guidance, Opus 4.6 identified 500 high-severity vulnerabilities. According to a company blog post, Anthropic staff are validating the findings before reporting the bugs to their developers, to ensure the LLM was not hallucinating or reporting false positives.

“AI language models are already capable of identifying novel vulnerabilities, and may soon exceed the speed and scale of even expert human researchers,” it said.

Anthropic may be keen to improve its reputation in the software security industry, given how its software has already been used to automate attacks.

Other companies are already using AI for bug hunting, and this is further evidence of what is possible.

But some software developers are overwhelmed by the number of poor-quality AI-generated bug reports, with at least one shutting its bug-bounty program because of abuse by AI-accelerated bug hunters.

This article originally appeared on CSOonline.com.

Windows PCs fade away 6 Feb 2026, 9:00 am

Last month, I met with a mid-sized law firm facing a common dilemma. Their Windows 10 laptops were nearing the end of support and needed to be replaced. Typically, this meant buying new hardware and software, which was predictable and straightforward. But this time, Microsoft suggested a different approach: move to Windows 365 Cloud PCs, which are billed as a monthly subscription, accessible from any device, and pitched as scalable, secure, and AI-enhanced. The catch? The shift from ownership to a subscription model and reduced local control led their IT team to question how “personal” these computers truly were.

Cloud subscriptions replace personal computing

The experience of this law firm encapsulates a major industry shift: Today, you don’t buy Windows, you rent access to it. Windows 365 Cloud PCs began as a business-only experiment at Microsoft but have grown into its central product and are now the primary road map, with local Windows installations becoming a mere stepping stone to cloud-based desktops. With tools like Windows 365 Boot, users can bypass the traditional local operating system altogether, landing directly into a personalized, cloud-streamed environment, even on third-party or bring-your-own devices.

Hardware no longer anchors the user’s experience; the familiar PC is now a portal into a metered utility controlled, updated, and managed by Microsoft. Windows 365 Switch blurs the line even further, allowing seamless migration between cloud and local environments. With each step, more user agency is surrendered in exchange for the convenience of a cloud-managed world.

The AI revolution and hardware

As if the cloud weren’t enough, artificial intelligence is muddying the waters. Microsoft is loud about a future built on AI PCs, touting Copilot integration, neural processing units (NPUs), and specialized hardware. But as Dell’s own product head recently admitted, customers aren’t flocking to buy these new devices for AI alone; the proposition is too abstract, and the day-to-day benefits too unclear. In reality, most significant leaps in AI are happening in the cloud, not on the desktop. Even Jeff Bezos framed the future simplistically: AI will appear everywhere, but it will live in the cloud.

Meanwhile, Microsoft is aggressively pushing its users to rely on its AI-powered tools and ecosystem, with access controlled through subscriptions. Gone is the idea of installing and running your own AI applications locally; instead, users are nudged to rent access to AI services, hosted and updated in Microsoft’s cloud. The notion of the self-managed PC is fast giving way to a persistent, subscription-based rental of power and capability, with AI primarily serving as another tool for vendor lock-in.

Hidden costs and loss of control

Businesses and individuals face new economic realities. The traditional model of investing in hardware meant to last five years is being replaced by an ever-escalating subscription treadmill. A basic Windows 365 Cloud PC costs about $41 a month for a configuration with 8GB of RAM, excluding Office or AI add-ons. Vendors pitch this as a trade-off against the hidden costs and complexity of managing local computers in hybrid work. Before long, subscription fees will become just another line item in ballooning IT expenses.
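For scale, at the quoted price (and assuming it stays flat), one seat over the five-year span of the traditional hardware model comes to:

$$\$41\ \text{per month} \times 12\ \text{months} \times 5\ \text{years} = \$2{,}460$$

That figure is per user and before any Office or AI add-ons.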

Perhaps more concerning is the core loss of control. The local PC gave users the keys. They owned, updated, installed, and protected their own digital spaces. The new cloud-and-AI reality puts Microsoft in charge of software, identity, AI tools, and even privacy decisions. The old personal computer offered freedom; the new model is managed, metered, and routinely adjusted to fit Microsoft’s evolving business interests. Yes, security can benefit. Yes, patching and remote management are simplified for companies. But every user now sits one step further removed from the heart of their own computing experience.

Cloud or autonomy

The rapidly approaching future of the Windows PC is no longer just about what’s on your desk, but what you’re permitted—by subscription—to access from the cloud. Microsoft promotes this as inevitable and, to some, the advantages are real. Yet for those uncomfortable with their digital world being defined and priced by a faraway corporation, alternatives remain.

Linux, once a niche for hobbyists and IT professionals, is today’s best option for those desiring true control, security, and transparency over ownership and privacy. The personal computer revolution began with the promise of control and independence; ironically, the rise of cloud, subscription, and vendor-driven AI is reversing those gains.

The law firm from the start of this post now faces a choice that becomes more common every day: buy into the convenience, security, and, yes, the ongoing costs and restrictions of cloud-based, AI-infused subscriptions or invest in platforms and approaches that keep ownership, privacy, and autonomy at the center. For an entire generation raised on the promise of personal computing, it’s a profound—and sobering—inflection point.

Python everywhere—but are we there yet? 6 Feb 2026, 9:00 am

How does Python’s new native JIT stack up against PyPy? Is there life for machine learning applications outside of Python? And can we run our Python apps in WebAssembly yet? Keep reading for answers to these and other burning questions from the Python develop-o-sphere.

Top picks for Python readers on InfoWorld

CPython vs. PyPy: Which Python runtime has the better JIT?

PyPy and its JIT have long been the go-to for developers needing a faster runtime. Now CPython has its own JIT, and seeing how it stacks up against PyPy makes for a fascinating investigation.

AI and machine learning outside of Python

Python might be the most popular major language for data science, but it’s hardly the only one. Where do languages like Java, Rust, and Go fit in the data-science stack?

Run PostgreSQL in Python — no setup required (video)

Take a spin with pgserver: A pip install-able library that contains a fully standalone instance of PostgreSQL.

How to use Rust with Python, and Python with Rust

Oldie but goodie: Get started with the PyO3 project, for merging Python’s convenience with Rust’s speed.

More good reads and Python updates elsewhere

awesome-python-rs: Curated Python resources that use Rust

As Rust and Python deepen their working relationship, it’s worth seeing how many projects span both domains. The list is small right now, but that just makes it easier to dive in and try things out.

Will incremental improvements be the death of Python?

Stefan Marr’s lecture at the Sponsorship Invited Talks 2025 casts a critical eye on Python’s efforts to speed things up and add true parallelism, saying these efforts could introduce new classes of concurrency bugs.

CPython Internals, by ‘zpoint’

A massive set of detailed notes about nearly every aspect of the CPython runtime: how memory structures are laid out, how garbage collection works, how the C API operates, and much more.

Slightly off-topic: The PlayStation 2 recompilation project

How’s this for ambitious? A tool in development attempts to recompile PlayStation 2 games to run on modern hardware—not via an emulator, but by translating MIPS R5900 instructions to C++.

Google unveils API and MCP server for developer documentation 6 Feb 2026, 1:52 am

Google is previewing the Developer Knowledge API and an associated Model Context Protocol (MCP) server, which together offer a machine-readable gateway to the company’s official developer documentation.

Announced February 4, the Developer Knowledge API is designed to be the programmatic source of truth for Google’s public documentation. Developers can access documentation from firebase.google.com, developer.android.com, docs.cloud.google.com, and other locations. Developers can search and retrieve the documentation pages as Markdown.

The companion MCP server gives AI-powered development tools the ability to “read” Google developer documentation, enabling more reliable features such as implementation guidance, troubleshooting, and comparative analysis. The Developer Knowledge MCP server can be connected to a user’s IDE or AI assistant. Google said the MCP server is compatible with a wide range of popular assistants and tools, helping to ensure that AI-based models have access to the most accurate, up-to-date information.

Full documentation can be found at developers.google.com. The preview focuses on providing high-quality, unstructured Markdown. As Google moves toward general availability, it plans to add support for structured content such as specific code sample objects and API reference entities. It also plans to expand the corpus to cover more of Google’s developer documentation and to reduce re-indexing latency.

Visual Studio Code update shines on coding agents 5 Feb 2026, 10:13 pm

Microsoft has released Visual Studio Code 1.109, the latest update of the company’s popular code editor. The new release brings multiple enhancements for agents, including improvements for optimization, extensibility, security, and session management.

Released February 4 and also known as the January 2026 release, VS Code 1.109 can be downloaded for Windows, Linux, and macOS at code.visualstudio.com.

Microsoft with this release said it was evolving VS Code to become “the home for multi-agent development.” New session management capabilities allow developers to run multiple agent sessions in parallel across local, background, and cloud environments, all from a single view. Users can jump between sessions, track progress at a glance, and let agents work independently.

Agent Skills, now generally available and enabled by default, allow developers to package specialized capabilities or domain expertise into reusable workflows. Skill folders contain tested instructions for specific domains like testing strategies, API design, or performance optimization, Microsoft said.

A preview feature, Copilot Memory, allows developers to store and recall important information across sessions. Agents work smarter with Copilot Memory and experience faster code search with external indexing, according to Microsoft. Users can enable Copilot Memory by setting github.copilot.chat.copilotMemory.enabled to true.

VS Code 1.109 also adds Claude Agent support, enabling developers to leverage Anthropic’s agent SDK directly. The new release also adds support for MCP apps, which render interactive visualizations in chat. The update also introduces terminal sandboxing, an experimental capability that restricts file and network access for agent-executed commands, and auto-approval rules, which skip confirmation for safe operations.

VS Code 1.109 follows last month’s release of VS Code 1.108, which introduced support for agent skills. Other improvements in VS Code 1.109 include the following:

- For Chat UX, streaming improvements show progress as it happens, while support for thinking tokens in Claude models provides better visibility into what the model is thinking.

- For coding and editing, developers can customize the text color of matching brackets using the new editorBracketMatch.foreground color theme token.

- An integrated browser lets developers preview and inspect localhost sites directly in VS Code, complete with DevTools and authentication support. This is a preview feature.

- Terminal commands in chat now show richer details, including syntax highlighting and working directory. New options let developers customize sticky scroll and use terminals in restricted workspaces.

- Finalized Quick Input button APIs offer more control over input placement and toggle states. Proposed APIs would enable chat model providers to declare configuration schemas.

Databricks adds MemAlign to MLflow to cut cost and latency of LLM evaluation 5 Feb 2026, 10:55 am

Databricks’ Mosaic AI Research team has added a new framework, MemAlign, to MLflow, its managed machine learning and generative AI lifecycle development service.

MemAlign is designed to help enterprises lower the cost and latency of training LLM-based judges, in turn making AI evaluation scalable and trustworthy enough for production deployments.

The new framework, according to the research team, addresses a critical bottleneck most enterprises are facing today: their ability to efficiently evaluate and govern the behavior of agentic systems or the LLMs driving them, even as demand for their rapid deployment continues to rise.

Traditional approaches to training LLM-based judges depend on large, labeled datasets, repeated fine-tuning, or prompt-based heuristics, all of which are expensive to maintain and slow to adapt as models, prompts, and business requirements change.

As a result, AI evaluation often remains manual and periodic, limiting enterprises’ ability to safely iterate and deploy models at scale, the team wrote in a blog post.

MemAlign’s memory-driven alternative to brute-force retraining

In contrast, MemAlign uses a dual memory system that replaces brute-force retraining with memory-driven alignment based on feedback from human subject matter experts, though it needs less of that feedback, and less often, than conventional training methods.

Instead of repeatedly fine-tuning models on large datasets, MemAlign separates knowledge into a semantic memory, which captures general evaluation principles, and an episodic memory, which stores task-specific feedback expressed in natural language by subject matter experts, depending on the use case.

This allows LLM judges to rapidly adapt to new domains or evaluation criteria using small amounts of human feedback, while retaining consistency across tasks, the research team wrote.

This reduces the latency and costs required to reach more efficient and stable levels of judgment, making the approach more practical to adapt for enterprises, the team added.
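The dual-memory idea can be illustrated with a short Python sketch. This is not MemAlign’s or MLflow’s API: the class and field names are hypothetical, and retrieval is reduced to matching on a criterion label. What it shows is the core mechanism described above: adapting the judge means appending principles or expert notes that get injected into its prompt, not retraining a model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Feedback:
    criterion: str  # hypothetical label, e.g. "refunds"
    example: str    # the case the expert reviewed
    note: str       # the expert's natural-language correction


@dataclass
class JudgeMemory:
    semantic: List[str] = field(default_factory=list)       # general evaluation principles
    episodic: List[Feedback] = field(default_factory=list)  # task-specific expert feedback

    def add_principle(self, principle: str) -> None:
        if principle not in self.semantic:  # keep principles deduplicated
            self.semantic.append(principle)

    def add_feedback(self, fb: Feedback) -> None:
        self.episodic.append(fb)

    def recall(self, criterion: str, k: int = 3) -> List[Feedback]:
        # Naive retrieval: the k most recent notes for the same criterion.
        matches = [fb for fb in self.episodic if fb.criterion == criterion]
        return matches[-k:]

    def judge_prompt(self, criterion: str, response: str) -> str:
        lines = ["Evaluation principles:"]
        lines += [f"- {p}" for p in self.semantic]
        lines.append("Relevant expert feedback:")
        lines += [f"- {fb.note} (case: {fb.example})" for fb in self.recall(criterion)]
        lines.append(f"Response to evaluate ({criterion}):")
        lines.append(response)
        return "\n".join(lines)
```

Each new piece of expert feedback changes the judge’s next prompt immediately, which is the property that makes small amounts of feedback cheap to apply.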

In tests run by Databricks, MemAlign matched the efficiency of approaches based on labeled datasets.

Analysts expect the new framework to benefit enterprises and their development teams.

“For developers, MemAlign helps reduce the brittle prompt engineering trap where fixing one error often breaks three others. It provides a delete or overwrite function for feedback. If a business policy changes, the developer can update or overwrite relevant feedback rather than restarting the alignment process,” said Stephanie Walter, practice leader of AI stack at HyperFRAME Research.

Walter was referring to the framework’s episodic memory, which is stored as a highly scalable vector database, enabling it to handle millions of feedback examples with minimal retrieval latency.
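To illustrate why an overwritable episodic memory matters, here is a toy store keyed by a feedback ID, with cosine-similarity lookup over caller-supplied vectors. The hand-written two-dimensional vectors stand in for a real embedding model, the in-memory dict stands in for the vector database, and none of the names come from MLflow. Changing a policy is a single `upsert` on the same ID, rather than a restart of the alignment process.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class EpisodicStore:
    """Hypothetical feedback store: upsert/delete by ID, nearest-neighbor recall."""

    def __init__(self):
        self._items = {}  # feedback_id -> (embedding, note)

    def upsert(self, feedback_id, embedding, note):
        # Reusing an ID overwrites stale guidance in place; nothing is retrained.
        self._items[feedback_id] = (list(embedding), note)

    def delete(self, feedback_id):
        self._items.pop(feedback_id, None)

    def nearest(self, query, k=2):
        ranked = sorted(
            self._items.values(),
            key=lambda item: cosine(query, item[0]),
            reverse=True,
        )
        return [note for _, note in ranked[:k]]


store = EpisodicStore()
store.upsert("refund-policy", [1.0, 0.0], "Refunds are allowed within 30 days.")
store.upsert("tone", [0.0, 1.0], "Be concise.")
# Policy change: overwrite the old note under the same ID.
store.upsert("refund-policy", [1.0, 0.0], "Refunds are allowed within 14 days.")
```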

According to Robert Kramer, principal analyst at Moor Insights and Strategy, the ability to keep LLM-based judges aligned with changing business requirements without destabilizing production systems is critical, and it becomes increasingly important for enterprises as agentic systems scale.

Agent Bricks may soon get MemAlign

Separately, a company spokesperson told InfoWorld that Databricks may soon embed MemAlign in Agent Bricks, its AI-driven agent development interface.

The company believes the new framework would be more efficient at evaluating and governing agents built on the interface than previously introduced capabilities such as Agent-as-a-Judge, Tunable Judges, and Judge Builder.

Judge Builder, which was previewed in November last year, is a visual interface to create and tune LLM judges with domain knowledge from subject matter experts and utilizes the Agent-as-a-Judge feature that offers insights into an agent’s trace, making evaluations more accurate.

“While the Judge Builder can incorporate subject matter expert feedback to align its behavior, that alignment step is currently expensive and requires significant amounts of human feedback,” the spokesperson said.

“MemAlign will soon be available in the Judge Builder, so users can build and iterate on their judges much faster and much more cheaply,” the spokesperson added.

The ‘Super Bowl’ standard: Architecting distributed systems for massive concurrency 5 Feb 2026, 10:00 am

In the world of streaming, the “Super Bowl” isn’t just a game. It is a distributed-systems stress test that happens in real time in front of tens of millions of people.

When I manage infrastructure for major events (whether it is the Olympics, a Premier League match or a season finale) I am dealing with a “thundering herd” problem that few systems ever face. Millions of users log in, browse and hit “play” within the same three-minute window.

But this challenge isn’t unique to media. It is the same nightmare that keeps e-commerce CTOs awake before Black Friday or financial systems architects up during a market crash. The fundamental problem is always the same: How do you survive when demand exceeds capacity by an order of magnitude?

Most engineering teams rely on auto-scaling to save them. But at the “Super Bowl standard” of scale, auto-scaling is a lie. It is too reactive. By the time your cloud provider spins up new instances, your latency has already spiked, your database connection pool is exhausted and your users are staring at a 500 error.

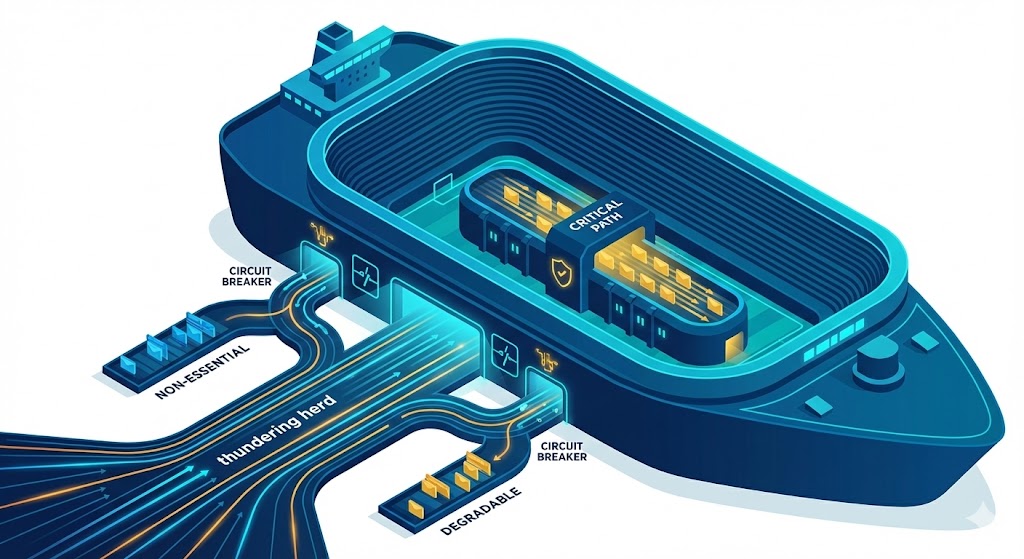

Here are the four architectural patterns we use to survive massive concurrency. These apply whether you are streaming touchdowns or processing checkout queues for a limited-edition sneaker drop.

1. Aggressive load shedding

The biggest mistake engineers make is trying to process every request that hits the load balancer. In a high-concurrency event, this is suicide. If your system capacity is 100,000 requests per second (RPS) and you receive 120,000 RPS, trying to serve everyone usually results in the database locking up and zero people getting served.

We implement load shedding based on business priority. It is better to serve 100,000 users perfectly and tell 20,000 users to “please wait” than to crash the site for all 120,000.

This requires classifying traffic at the gateway layer into distinct tiers:

- Tier 1 (Critical): Login, Video Playback (or for e-commerce: Checkout, Inventory Lock). These requests must succeed.

- Tier 2 (Degradable): Search, Content Discovery, User Profile edits. These can be served from stale caches.

- Tier 3 (Non-Essential): Recommendations, “People also bought,” Social feeds. These can fail silently.
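To make the 100,000 vs. 120,000 RPS example concrete, here is a minimal Python sketch that fills capacity strictly in tier order. The per-tier demand split is a made-up assumption and `plan_admission` is a hypothetical helper; a real gateway would enforce this per request (for example with per-tier token buckets) rather than as a one-off plan.

```python
def plan_admission(capacity_rps, demand_rps):
    """Fill capacity by business priority (tier 1 first).

    demand_rps maps tier number -> offered requests per second.
    Returns (admitted, shed), both keyed by tier.
    """
    admitted, remaining = {}, capacity_rps
    for tier in sorted(demand_rps):  # lower tier number = more critical
        take = min(demand_rps[tier], remaining)
        admitted[tier] = take
        remaining -= take
    shed = {tier: demand_rps[tier] - admitted[tier] for tier in demand_rps}
    return admitted, shed


# 120,000 RPS offered against 100,000 RPS of capacity (hypothetical split).
admitted, shed = plan_admission(100_000, {1: 70_000, 2: 35_000, 3: 15_000})
# admitted -> {1: 70000, 2: 30000, 3: 0}
# shed     -> {1: 0, 2: 5000, 3: 15000}
```

The 20,000 requests that do not fit come entirely from the lower tiers, so login and playback (or checkout) traffic is never the part that gets turned away while capacity remains.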

We use adaptive concurrency limits to detect when downstream latency is rising. As soon as the database response time crosses a threshold (e.g. 50ms), the system automatically stops calling the Tier 3 services. The user sees a slightly generic homepage, but the video plays or the purchase completes.

For any high-volume system, you must define your “degraded mode.” If you don’t decide what to turn off during a spike, the system will decide for you, usually by turning off everything.
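The latency-triggered degraded mode can be sketched as a small decision function. This assumes a smoothed (exponentially weighted) average of downstream database latency as the signal and uses the 50ms threshold from the text; the smoothing factor and the `serve_stale` / `drop` action names are illustrative assumptions, not a particular gateway’s API.

```python
from enum import IntEnum


class Tier(IntEnum):
    CRITICAL = 1       # login, playback, checkout
    DEGRADABLE = 2     # search, discovery: may be served from stale cache
    NON_ESSENTIAL = 3  # recommendations, social feeds: may fail silently


class PriorityShedder:
    """Hypothetical gateway-side shedder driven by smoothed DB latency."""

    def __init__(self, latency_threshold_ms=50.0, alpha=0.2):
        self.threshold_ms = latency_threshold_ms
        self.alpha = alpha   # weight of each new sample in the moving average
        self.ewma_ms = None  # no signal until the first observation

    def observe_latency(self, ms):
        # An exponentially weighted moving average keeps one slow query from
        # flipping the whole site into degraded mode.
        if self.ewma_ms is None:
            self.ewma_ms = ms
        else:
            self.ewma_ms = self.alpha * ms + (1 - self.alpha) * self.ewma_ms

    def degraded(self):
        return self.ewma_ms is not None and self.ewma_ms > self.threshold_ms

    def decide(self, tier):
        if tier == Tier.CRITICAL:
            return "serve"  # tier 1 must always be attempted
        if tier == Tier.DEGRADABLE:
            return "serve_stale" if self.degraded() else "serve"
        return "drop" if self.degraded() else "serve"
```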

2. Bulkheads and blast radius isolation

Manoj Yerrasani

On a cruise ship, the hull is divided into watertight compartments called bulkheads. If one section floods, the ship stays afloat. In distributed systems, we often inadvertently build ships with no walls.

I have seen massive outages caused by a minor feature. For example, a third-party API that serves “user avatars” goes down. Because the “Login” service waits for the avatar to load before confirming the session, the entire login flow hangs. A cosmetic feature takes down the core business.

To prevent this, we use the bulkhead pattern. We isolate thread pools and connection pools for different dependencies.

In an e-commerce context, your “Inventory Service” and your “User Reviews Service” should never share the same database connection pool. If the Reviews service gets hammered by bots scraping data, it should not consume the resources needed to look up product availability.

We strictly enforce timeouts and circuit breakers. If a non-essential dependency fails more than 50% of the time, we stop calling it immediately and return a default value (e.g. a generic avatar or a cached review score).
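Here is a single-threaded Python sketch of a failure-rate circuit breaker using the 50% threshold from the text. The window size, minimum call count, cooldown, and single-trial "half-open" behavior are assumptions; production libraries (Resilience4j on the JVM, for example) add thread safety, metrics, and call timeouts that this sketch leaves out.

```python
import time
from collections import deque


class CircuitBreaker:
    """Opens when the recent failure rate exceeds max_failure_rate, then
    returns the fallback without calling the dependency until cooldown ends."""

    def __init__(self, fallback, window=20, min_calls=10, max_failure_rate=0.5,
                 cooldown_s=5.0, clock=time.monotonic):
        self.fallback = fallback
        self.min_calls = min_calls
        self.max_failure_rate = max_failure_rate
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.results = deque(maxlen=window)  # True = success, False = failure
        self.opened_at = None                # None means the breaker is closed

    def call(self, fn, *args, **kwargs):
        half_open = False
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown_s:
                return self.fallback         # open: skip the dependency entirely
            half_open = True                 # cooldown over: allow one trial call
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self._record(False, half_open)
            return self.fallback
        self._record(True, half_open)
        return result

    def _record(self, ok, half_open):
        if half_open:
            # The trial call decides: close on success, re-open on failure.
            self.results.clear()
            self.opened_at = None if ok else self.clock()
            return
        self.results.append(ok)
        if len(self.results) >= self.min_calls:
            failure_rate = self.results.count(False) / len(self.results)
            if failure_rate > self.max_failure_rate:
                self.opened_at = self.clock()


# Usage (fetch_avatar_url is a hypothetical dependency call):
# avatar_breaker = CircuitBreaker(fallback="generic-avatar.png")
# url = avatar_breaker.call(fetch_avatar_url, user_id)
```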

Crucially, we prefer semaphore isolation over thread pool isolation for high-throughput services. Thread pools add context-switching overhead. Semaphores simply limit the number of concurrent calls allowed to a specific dependency, rejecting excess traffic instantly without queuing. The core transaction must survive even if the peripherals are burning.
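A semaphore bulkhead fits in a few lines. This Python sketch uses a non-blocking acquire so that calls beyond the limit get the fallback immediately instead of waiting in a queue. The dependency names and limits are illustrative assumptions.

```python
import threading


class SemaphoreBulkhead:
    """Caps concurrent calls to one dependency; excess calls are rejected
    immediately with the fallback instead of queuing."""

    def __init__(self, max_concurrent, fallback):
        self._slots = threading.BoundedSemaphore(max_concurrent)
        self.fallback = fallback

    def call(self, fn, *args, **kwargs):
        if not self._slots.acquire(blocking=False):
            return self.fallback  # bulkhead full: reject, don't queue
        try:
            return fn(*args, **kwargs)
        finally:
            self._slots.release()


# One bulkhead per dependency, so bots hammering reviews cannot consume the
# capacity needed for inventory lookups. Limits are illustrative only.
inventory_bulkhead = SemaphoreBulkhead(max_concurrent=200, fallback="unavailable")
reviews_bulkhead = SemaphoreBulkhead(max_concurrent=20, fallback=[])
```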

3. Taming the thundering herd with request collapsing

Imagine 50,000 users all load the homepage at the exact same second (kick-off time or a product launch). All 50,000 requests hit your backend asking for the same data: “What is the metadata for the Super Bowl stream?”

If you let all 50,000 requests hit your database, you will crush it.

Caching is the obvious answer, but standard caching isn’t enough. You are vulnerable to the “Cache Stampede.” This happens when a popular cache key expires. Suddenly, thousands of requests notice the missing key and all of them rush to the database to regenerate it simultaneously.

To solve this, we use request collapsing (often called “singleflight”).

When a cache miss occurs, the first request goes to the database to fetch the data. The system identifies that 49,999 other people are asking for the same key. Instead of sending them to the database, it holds them in a wait state. Once the first request returns, the system populates the cache and serves all 50,000 users with that single result.

This pattern is critical for “flash sale” scenarios in retail. When a million users refresh the page to see if a product is in stock, you cannot do a million database lookups. You do one lookup and broadcast the result.
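Go developers will recognize this as the pattern behind the golang.org/x/sync/singleflight package. Here is a minimal thread-based Python sketch of the same idea: the first caller for a key becomes the leader and runs the load, while concurrent callers for that key wait and share its result (or its exception). The `get_stream_metadata` wrapper and its cache key are hypothetical, and the dict cache stands in for a real cache tier.

```python
import threading


class _Call:
    def __init__(self):
        self.done = threading.Event()
        self.value = None
        self.error = None


class SingleFlight:
    """Collapses concurrent requests for the same key into one execution."""

    def __init__(self):
        self._lock = threading.Lock()
        self._calls = {}  # key -> in-flight _Call

    def do(self, key, fn):
        with self._lock:
            call = self._calls.get(key)
            leader = call is None
            if leader:
                call = _Call()
                self._calls[key] = call
        if leader:
            try:
                call.value = fn()
            except Exception as exc:
                call.error = exc
            finally:
                with self._lock:
                    del self._calls[key]  # later misses start a fresh flight
                call.done.set()
        else:
            call.done.wait()  # followers block until the leader finishes
        if call.error is not None:
            raise call.error
        return call.value


cache = {}
flight = SingleFlight()


def get_stream_metadata(load_from_db):
    key = "stream:metadata"  # hypothetical cache key
    if key in cache:
        return cache[key]

    def load_and_fill():
        value = load_from_db()
        cache[key] = value  # populate before followers are released
        return value

    return flight.do(key, load_and_fill)
```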

We also employ probabilistic early expiration (or the X-Fetch algorithm). Instead of waiting for a cache item to fully expire, we re-fetch it in the background while it is still valid. This ensures the user always hits a warm cache and never triggers a stampede.
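The commonly cited XFetch rule refreshes an entry early when `now - delta * beta * log(u) >= expiry`, where `delta` is how long the last regeneration took, `beta` is a tuning knob (1.0 by default), and `u` is uniform on (0, 1]. Because `log(u)` is negative, the random look-ahead grows as expiry approaches and when regeneration is slow. This Python sketch recomputes inline on the one request that wins the draw while everyone else keeps getting the cached value; handing that refresh to a background worker, as described above, is a straightforward variation. The class name and parameters are assumptions.

```python
import math
import random
import time


class XFetchCache:
    """Cache with probabilistic early expiration (XFetch-style)."""

    def __init__(self, ttl_s, beta=1.0, clock=time.monotonic, rng=None):
        self.ttl_s = ttl_s
        self.beta = beta  # > 1 refreshes earlier, < 1 later
        self.clock = clock
        # Uniform draw in (0, 1]; excludes 0 so log() is always defined.
        self.rng = rng or (lambda: 1.0 - random.random())
        self._store = {}  # key -> (value, delta_s, expiry)

    def get(self, key, recompute):
        entry = self._store.get(key)
        if entry is not None:
            value, delta, expiry = entry
            # -log(u) is an exponential draw, so this check fires more often
            # near expiry and for entries that are slow to regenerate.
            if self.clock() - delta * self.beta * math.log(self.rng()) < expiry:
                return value
        start = self.clock()
        value = recompute()
        delta = self.clock() - start  # remember the regeneration cost
        self._store[key] = (value, delta, self.clock() + self.ttl_s)
        return value
```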

4. The ‘game day’ rehearsal

The patterns above are theoretical until tested. In my experience, you do not rise to the occasion during a crisis; you fall to the level of your training.

For the Olympics and Super Bowl, we don’t just hope the architecture works. We break it on purpose. We run game days where we simulate massive traffic spikes and inject failures into the production environment (or a near-production replica).

We simulate specific disaster scenarios:

- What happens if the primary Redis cluster vanishes?

- What happens if the recommendation engine latency spikes to 2 seconds?

- What happens if 5 million users log in within 60 seconds?

During these exercises, we validate that our load shedding actually kicks in. We verify that the bulkheads actually stop the bleeding. Often, we find that a default configuration setting (like a generic timeout in a client library) undoes all our hard work.
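One lightweight way to run scenarios like "recommendation engine latency spikes to 2 seconds" is a fault-injection wrapper that is inert until a game-day switch is flipped. This Python decorator is a sketch under that assumption: the switch, the wrapped function, and the injected `ConnectionError` are hypothetical stand-ins, and the injectable `rng` and `sleep` exist only so the behavior can be tested deterministically.

```python
import functools
import random
import time


def inject_faults(latency_s=0.0, error_rate=0.0, enabled=lambda: False,
                  rng=random.random, sleep=time.sleep):
    """Add latency and/or raise errors, but only while enabled() is True."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            if enabled():
                if latency_s > 0:
                    sleep(latency_s)  # simulate a slow dependency
                if rng() < error_rate:
                    raise ConnectionError(f"injected fault in {fn.__name__}")
            return fn(*args, **kwargs)
        return wrapper
    return decorator


# Hypothetical game-day switch and dependency.
GAME_DAY = {"active": False}


@inject_faults(latency_s=2.0, enabled=lambda: GAME_DAY["active"])
def get_recommendations(user_id):
    return ["generic-row"]
```

During the exercise, the thing to watch is not the injected fault itself but whether the timeouts, breakers, and load shedding around the call behave as designed.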

For e-commerce leaders, this means running stress tests that exceed your projected Black Friday traffic by at least 50%. You must identify the “breaking point” of your system. If you don’t know exactly how many orders per second breaks your database, you aren’t ready for the event.

Resilience is a mindset, not a tool

You cannot buy “resilience” from AWS or Azure. You cannot solve these problems just by switching to Kubernetes or adding more nodes.

The “Super Bowl Standard” requires a fundamental shift in how you view failures. We assume components will fail. We assume the network will be slow. We assume users will behave like a DDoS attack.

Whether you are building a streaming platform, a banking ledger or a retail storefront, the goal is not to build a system that never breaks. The goal is to build a system that breaks partially and gracefully so that the core business value survives.

If you wait until the traffic hits to test these assumptions, it is already too late.

This article is published as part of the Foundry Expert Contributor Network.


What is context engineering? And why it’s the new AI architecture 5 Feb 2026, 9:00 am

Context engineering is the practice of designing systems that determine what information an AI model sees before it generates a response to user input. It goes beyond formatting prompts or crafting instructions, instead shaping the entire environment the model operates in: grounding data, schemas, tools, constraints, policies, and the mechanisms that decide which pieces of information make it into the model’s input at any moment. In applied terms, good context engineering means establishing a small set of high-signal tokens that improve the likelihood of a high-quality outcome.

Think of prompt engineering as a predecessor discipline to context engineering. While prompt engineering focuses on wording, sequencing, and surface-level instructions, context engineering extends the discipline into architecture and orchestration. It treats the prompt as just one layer in a larger system that selects, structures, and delivers the right information in the right format so that an LLM can plausibly accomplish its assigned task.

What does ‘context’ mean in AI?

In AI systems, context refers to everything a large language model (LLM) has access to when producing a response: not just the user’s latest query, but the full envelope of information, rules, memory, and tools that shape how the model interprets that query. The total amount of information the system can process at once is called the context window. The context consists of several layers that work together to guide model behavior:

- The system prompt defines the model’s role, boundaries, and behavior. This layer can include rules, examples, guardrails, and style requirements that persist across turns.

- A user prompt is the immediate request — the short-lived, task-specific input that tells the model what to do right now.

- State or conversation history acts as short-term memory, giving the model continuity across turns by including prior dialog, reasoning steps, and decisions.

- Long-term memory is persistent and spans many sessions. It contains durable preferences, stable facts, project summaries, or information the system is designed to reintroduce later.

- Retrieved information provides the model with external, up-to-date knowledge by pulling relevant snippets from documents, databases, or APIs. Retrieval-augmented generation turns this into a dynamic and domain-specific knowledge layer.

- Available tools consist of the actions an LLM is capable of performing with the help of tool calling or MCP servers: function calls, API endpoints, and system commands with defined inputs and outputs. These tools help the model take actions rather than only produce text.

- Structured output definitions tell the model exactly how its response should be formatted, for example by requiring a JSON object, a table, or a specific schema.

Together, these layers form the full context an AI system uses to generate responses that are hopefully accurate and grounded. However, a host of difficulties with context in AI can lead to suboptimal results.
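The layers above can be sketched as a function that assembles a single model request. The field names and the `{"messages": ..., "tools": ...}` shape are assumptions loosely modeled on common chat-completion APIs, not any particular provider’s schema. The point is that the system prompt, memory, retrieved snippets, and output schema are separate inputs that code decides how to combine.

```python
import json
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class ContextLayers:
    system_prompt: str
    user_prompt: str
    history: List[Tuple[str, str]] = field(default_factory=list)  # (role, text) short-term state
    long_term_memory: List[str] = field(default_factory=list)     # durable facts and preferences
    retrieved: List[str] = field(default_factory=list)            # snippets from retrieval
    tools: List[dict] = field(default_factory=list)               # tool specs (name, description, params)
    output_schema: Optional[dict] = None                          # required response shape


def assemble(ctx: ContextLayers) -> dict:
    """Combine the layers into one generic chat-style request."""
    system_parts = [ctx.system_prompt]
    if ctx.long_term_memory:
        system_parts.append("Known about this user:\n" + "\n".join(f"- {m}" for m in ctx.long_term_memory))
    if ctx.retrieved:
        system_parts.append("Reference material:\n" + "\n\n".join(ctx.retrieved))
    if ctx.output_schema is not None:
        system_parts.append("Reply with JSON matching this schema:\n" + json.dumps(ctx.output_schema))
    messages = [{"role": "system", "content": "\n\n".join(system_parts)}]
    messages += [{"role": role, "content": text} for role, text in ctx.history]
    messages.append({"role": "user", "content": ctx.user_prompt})
    return {"messages": messages, "tools": ctx.tools}
```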

What is context failure?

The term “context failure” describes a set of common breakdown modes when AI context systems go wrong. These failures fall into four main categories:

- Context poisoning happens when a hallucination or other factual error slips into the context and then gets used as if it were truth. Over time, the model builds on that flawed premise, compounding mistakes and derailing reasoning.

- Context distraction occurs when the context becomes too large or verbose. Instead of reasoning from training data, the model can overly focus on the accumulated history — repeating past actions or clinging to old information rather than synthesizing a fresh, relevant answer.

- Context confusion arises when irrelevant material — extra tools, noisy data, or unrelated content — creeps into context. The model may treat that irrelevant information as important, leading to poor outputs or incorrect tool calls.

- Context clash occurs when new context conflicts with earlier context. If information is added incrementally, earlier assumptions or partial answers may contradict later, clearer data — resulting in inconsistent or broken model behavior.

One of the advances that AI players like OpenAI and Anthropic have offered for their chatbots is the capability to handle increasingly large context windows. But size isn’t everything, and larger windows can indeed be more prone to the sorts of failures described here. Without deliberate context management (validation, summarization, selective retrieval, pruning, or isolation), even large context windows can produce unreliable or incoherent outcomes.

What are some context engineering techniques and strategies?

Context engineering aims to overcome these types of context failures. Here are some of the main techniques and strategies to apply:

- Knowledge base or tool selection. Choose external data sources, databases, documents or tools the system should draw from. A well-curated knowledge base directs retrieval toward relevant content and reduces noise.

- Context ordering or compression. Decide which pieces of information deserve space and which should be shortened or removed. Systems often accumulate far more text than the model needs, so pruning or restructuring keeps the high-signal material while dropping noise. For instance, you could replace a 2,000-word conversation history with a 150-word summary that preserves decisions, constraints, and key facts but omits chit-chat and digressions. Or you could sort retrieved documents by relevance score and inject only the top two chunks instead of all twenty. Both approaches keep the context window focused on the information most likely to produce a correct response.

- Long-term memory storage and retrieval design. Define how persistent information, including user preferences, project summaries, domain facts, or outcomes from prior sessions, is saved and reintroduced when needed. A system might store a user’s preferred writing style once and automatically reinsert a short summary of that preference into future prompts, instead of requiring the user to restate it manually each time. Or it could store the results of a multi-step research task so the model can recall them in later sessions without rerunning the entire workflow.

- Structured information and output schemas. These allow you to provide predictable formats for both context and responses. Giving the model structured context — such as a list of fields the user must fill out or a predefined data schema — reduces ambiguity and keeps the model from improvising formats. Requiring structured output does the same: for instance, demanding that every answer conform to a specific JSON shape lets downstream systems validate and consume the output reliably.

- Workflow engineering. You can link multiple LLM calls, retrieval steps, and tool actions into a coherent process. Rather than issuing one giant prompt, you design a sequence: gather requirements, retrieve documents, summarize them, call a function, evaluate the result, and only then generate the final output. Each step injects just the right context at the right moment. A practical example is a customer-support bot that first retrieves account data, then asks the LLM to classify the user’s issue, then calls an internal API, and only then composes the final message.

- Selective retrieval and retrieval-augmented generation. This technique applies filtering so the model sees only the parts of external data that matter. Instead of feeding the model an entire knowledge base, you retrieve only the paragraphs that match the user’s query. One common example is chunking documents into small sections, ranking them by semantic relevance, and injecting only the top few into the prompt. This keeps the context window small while grounding the answer in accurate information.
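To make selective retrieval concrete, here is a minimal TypeScript sketch that chunks a document, scores each chunk against the query, and injects only the top k. It assumes a bag-of-words cosine similarity as a stand-in for the embedding-based semantic ranking a production system would typically use, and the names chunkText and topKChunks are illustrative rather than taken from any library.

```typescript
// Split text into chunks of roughly `wordsPerChunk` words each.
function chunkText(text: string, wordsPerChunk: number): string[] {
  const words = text.split(/\s+/).filter((w) => w.length > 0);
  const chunks: string[] = [];
  for (let i = 0; i < words.length; i += wordsPerChunk) {
    chunks.push(words.slice(i, i + wordsPerChunk).join(" "));
  }
  return chunks;
}

// Build a term-frequency vector from lowercased words, ignoring punctuation.
function termFrequencies(text: string): Map<string, number> {
  const tf = new Map<string, number>();
  for (const term of text.toLowerCase().split(/\W+/)) {
    if (term.length === 0) continue;
    tf.set(term, (tf.get(term) ?? 0) + 1);
  }
  return tf;
}

// Cosine similarity between two term-frequency vectors (0 when either is empty).
function cosine(a: Map<string, number>, b: Map<string, number>): number {
  let dot = 0;
  a.forEach((count, term) => {
    dot += count * (b.get(term) ?? 0);
  });
  const norm = (v: Map<string, number>): number => {
    let sum = 0;
    v.forEach((c) => {
      sum += c * c;
    });
    return Math.sqrt(sum);
  };
  const denom = norm(a) * norm(b);
  return denom === 0 ? 0 : dot / denom;
}

// Rank chunks by similarity to the query and keep only the k best.
function topKChunks(chunks: string[], query: string, k: number): string[] {
  const q = termFrequencies(query);
  return chunks
    .map((chunk) => ({ chunk, score: cosine(termFrequencies(chunk), q) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((entry) => entry.chunk);
}
```

For example, topKChunks(chunkText(manual, 120), userQuery, 3) would keep three roughly 120-word sections of a hypothetical manual string. Swapping termFrequencies and cosine for real embeddings changes the ranking quality but not the shape of the pipeline.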

Together, these approaches allow context engineering to deliver a tighter, more relevant, and more reliable context window for the model — minimizing noise, reducing the risk of hallucination or confusion, and giving the model the right tools and data to behave predictably.

Why is context engineering important for AI agents?

Context engineering gives AI agents the information structure they need to operate reliably across multiple steps and decisions. Strong context design treats the prompt, the memory, the retrieved data, and the available tools as a coherent environment that drives consistent behavior. Agents depend on this environment because context is a critical but limited resource for long-horizon tasks.

Agents fail most often when their context becomes polluted, overloaded, or irrelevant. Small errors in early turns can accumulate into large failures when the surrounding context contains hallucinations or extraneous details. Good context engineering improves their efficiency by giving them only the information they need while filtering out noise. Techniques like ranked retrieval and selective memory keep the context window focused, reducing unnecessary token load and improving responsiveness.
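Keeping the window focused can be sketched as a budgeted packer: take items ranked by relevance and add them until a token budget runs out. This minimal sketch assumes a rough four-characters-per-token estimate (a rule of thumb for English text, not a real tokenizer), and ContextItem and packContext are hypothetical names.

```typescript
interface ContextItem {
  text: string;
  score: number; // relevance score from retrieval or memory ranking
}

// Rough heuristic: about four characters per token for English prose.
// A real system would count tokens with the model's own tokenizer.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Greedily keep the highest-scoring items that still fit within the budget.
function packContext(items: ContextItem[], budgetTokens: number): ContextItem[] {
  const ranked = items.slice().sort((a, b) => b.score - a.score);
  const kept: ContextItem[] = [];
  let used = 0;
  for (const item of ranked) {
    const cost = estimateTokens(item.text);
    if (used + cost > budgetTokens) continue; // skip items that would overflow
    kept.push(item);
    used += cost;
  }
  return kept;
}
```

Skipping an item that would overflow, rather than stopping, lets a short lower-ranked item use leftover space; stopping at the first overflow is an equally defensible choice if strict relevance order matters more.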

Context also enables statefulness — that is, the ability for agents to remember preferences, past actions, or project summaries across sessions. Without this scaffolding, agents behave like one-off chatbots rather than systems capable of long-term adaptation.
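A minimal sketch of that scaffolding is a store that saves facts per user and reinserts a short summary into later prompts. It keeps everything in an in-memory Map purely for illustration (a real agent would persist to a database or file between sessions), and the MemoryStore class and prompt layout are hypothetical.

```typescript
// Hypothetical long-term memory: per-user facts keyed by topic.
class MemoryStore {
  private facts = new Map<string, Map<string, string>>();

  remember(userId: string, key: string, value: string): void {
    let userFacts = this.facts.get(userId);
    if (!userFacts) {
      userFacts = new Map<string, string>();
      this.facts.set(userId, userFacts);
    }
    userFacts.set(key, value); // a newer value for the same topic replaces the old one
  }

  // Render remembered facts as a short block to prepend to a prompt.
  summarize(userId: string): string {
    const userFacts = this.facts.get(userId);
    if (!userFacts || userFacts.size === 0) return "";
    const lines: string[] = [];
    userFacts.forEach((value, key) => {
      lines.push(`- ${key}: ${value}`);
    });
    return `Known about this user:\n${lines.join("\n")}`;
  }
}

// Prepend remembered facts (if any) to the user's new request.
function buildPrompt(store: MemoryStore, userId: string, request: string): string {
  const memory = store.summarize(userId);
  return memory ? `${memory}\n\n${request}` : request;
}
```

Keying facts by topic means a changed preference overwrites the old one instead of accumulating contradictory entries, which is one way to avoid the context clash described earlier.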

Finally, context engineering is what allows agents to integrate tools, call functions, and orchestrate multi-step workflows. Tool specifications, output schemas, and retrieved data all live in the context, so the quality of that context determines whether the agent can act accurately in the real world. In tool-integrated agent patterns, the context is the operating environment where agents reason and take action.
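Because output schemas live in the context, an agent harness typically checks the model’s structured output before acting on it. Below is a minimal sketch using a hand-written TypeScript type guard; the TicketClassification shape is hypothetical, and a production system might use a JSON Schema validator instead.

```typescript
// Hypothetical shape the model is instructed to return.
interface TicketClassification {
  category: "billing" | "technical" | "account";
  priority: number; // integer from 1 (low) to 3 (high)
  summary: string;
}

const CATEGORIES = ["billing", "technical", "account"];

// Type guard: true only if `value` matches the expected shape exactly enough to act on.
function isTicketClassification(value: unknown): value is TicketClassification {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v.category === "string" &&
    CATEGORIES.indexOf(v.category) !== -1 &&
    typeof v.priority === "number" &&
    Number.isInteger(v.priority) &&
    v.priority >= 1 &&
    v.priority <= 3 &&
    typeof v.summary === "string"
  );
}

// Parse raw model output; return null rather than act on malformed data.
function parseModelOutput(raw: string): TicketClassification | null {
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch {
    return null; // not valid JSON at all
  }
  return isTicketClassification(parsed) ? parsed : null;
}
```

Returning null instead of guessing lets the caller re-prompt the model or hand off to a human, rather than passing a malformed object to the next tool call.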

Context engineering guides

Want to learn more? Dive deeper into these resources:

- LlamaIndex’s “What is context engineering — what it is and techniques to consider”: A solid foundational guide explaining how context engineering expands on prompt engineering, and breaking down the different types of context that need to be managed.

- Anthropic’s “Effective context engineering for AI agents”: Explains why context is a finite but critical resource for agents, and frames context engineering as an essential design discipline for robust LLM applications.

- SingleStore’s “Context engineering: A definitive guide”: Walks you through full-stack context engineering: how to build context-aware, reliable, production-ready AI systems by integrating data, tools, memory, and workflows.

- PromptingGuide.ai’s “Context engineering guide”: Offers a broader definition of context engineering (across LLM types, including multimodal), and discusses iterative processes to optimize instructions and context for better model performance.

- DataCamp’s “Context engineering: A guide with examples”: Useful primer that explains different kinds of context (memory, retrieval, tools, structured output), helping practitioners recognize where context failures occur and how to avoid them.

- Akira.ai’s “Context engineering: Complete guide to building smarter AI systems”: Emphasizes context engineering’s role across use cases from chatbots to enterprise agents, and stresses how it differs from prompt engineering for scalable AI systems.

- Latitude’s “Complete guide to context engineering for coding agents”: Focuses specifically on coding agents and how context engineering helps them handle real-world software development tasks accurately and consistently.

These guides form a strong starting point if you want to deepen your understanding of context engineering — what it is, why it matters, and how to build context-aware AI systems in practice. As models grow more capable, mastering context engineering will increasingly separate toy experiments from reliable, production-grade agents.

Beyond NPM: What you need to know about JSR 5 Feb 2026, 9:00 am

NPM, the Node Package Manager, hosts millions of packages and serves billions of downloads annually. It has served developers well over the years but has its shortcomings, notably around TypeScript build complexity and package provenance. Recently, NPM’s provenance issues have resulted in prominent security breaches, leading more developers to seek alternatives.

The JavaScript Registry (JSR), brought to us by Deno creator Ryan Dahl, was designed to overcome these issues. With enterprise adoption already underway, it is time to see what the next generation of JavaScript packaging looks like.

The intelligent JavaScript registry

Whether or not you opt to use it right away, there are two good reasons to know about JSR. First, it addresses technical issues in NPM, which are worth exploring in detail. And second, it’s already gathering steam and may soon reach critical mass.

JSR takes a novel approach to resolving known issues in NPM. For one thing, it can ship you compiled (or stripped) JavaScript, even if the original package is TypeScript. Or, if you are using a platform that runs TypeScript directly (like Deno), you’ll get the TypeScript. It also makes stronger guarantees and offers security controls you won’t find in NPM. Things like package authentication and metadata tracking are more rigorous. JSR also handles the automatic generation of package documentation.

Every package consumer will appreciate these features, but they are even more appealing to package creators. Package authors don’t need to run a build step for TypeScript, and the process of publishing libraries is simpler and easier to reproduce. That’s probably why major shops like OpenAI and Supabase are already using JSR.

JSR responds to package requests based on your application setup and requires little additional overhead. It is also largely (and seamlessly) integrated with NPM. That makes trying it out relatively easy. JSR feels more like an NPM upgrade than a replacement.

How JSR works

How to handle TypeScript code in a JavaScript stack has become a hot topic lately. Mixing TypeScript and JavaScript is popular in development, but leads to friction during the build step, where it necessitates compiling the TypeScript code beforehand. Making type stripping a permanent feature in TypeScript could eventually solve that issue, but JSR resolves it today, by offloading compilation to the registry.

JSR is essentially designed as a superset of the NPM registry, but one that knows the difference between TypeScript and JavaScript.

When a package author publishes to JSR, they upload the TypeScript source directly, with no build step required. JSR then acts as the intelligent middle layer. If you request a package from a Deno environment, JSR serves the package in the original TypeScript version. But if you request it from a Node environment (via npm install), JSR automatically transpiles the code to ESM (ECMAScript Modules)-compliant JavaScript and generates the necessary type definition (.d.ts) files on the fly.

This capability eliminates the so-called TypeScript tax that has plagued library authors for years. There’s no need to configure complex build pipelines or maintain separate distribution folders. You simply publish code, and the registry adapts it to the consumer’s runtime.

It’s also important to know that JSR has adopted the modern ESM format as its module system. That isn’t a factor unless you are relying on an older package built on CommonJS (CJS). Using CJS with JSR is possible but requires extra work. Adopting ESM is a forward-looking move by the JSR team, supporting the JavaScript ecosystem’s transition to one approved standard: ESM.

The ‘slow types’ tradeoff

To make its real-time transpilation possible, JSR introduces a constraint known as “no slow types.” JSR forbids global type inference for exported functions and classes. You must explicitly type your exports (e.g., by defining a function’s return type rather than letting the compiler guess it). This is pretty much a common best practice, anyway.

Explicitly defining return types allows JSR to generate documentation and type definitions instantly without running a full, slow compiler pass for every install. It’s a small tradeoff in developer experience for a massive performance gain in the ecosystem.
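The rule is easiest to see side by side. In this minimal sketch, the exported parseDuration function (a hypothetical example, not from any JSR package) declares its parameter and return types explicitly, which is what the “no slow types” rule asks of public exports; the commented-out variant leaves the return type to inference, which JSR’s publish-time check would likely flag.

```typescript
// Accepted under "no slow types": parameter and return types are explicit,
// so declarations and docs can be generated without full type inference.
export function parseDuration(input: string): number | undefined {
  const match = /^(\d+)(ms|s|m)$/.exec(input);
  if (!match) return undefined;
  const value = Number(match[1]);
  const unit = match[2];
  // Convert everything to milliseconds.
  if (unit === "ms") return value;
  if (unit === "s") return value * 1000;
  return value * 60_000;
}

// Likely flagged as a slow type: the return type must be inferred from the body.
// export function parseDurationInferred(input: string) {
//   return input.endsWith("ms") ? Number(input.slice(0, -2)) : undefined;
// }
```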

Security defaults

Perhaps most handy for enterprise users, JSR tackles supply-chain security directly, through provenance. It integrates with GitHub Actions via OpenID Connect.

When a package is published, JSR generates a transparency log (using Sigstore). The log cryptographically links the package in the registry to the specific commit and CI (continuous integration) run that created it. Unlike the “blind trust” model of the legacy NPM registry, JSR lets you verify that the code you are installing came from the source repository it claims.

This also makes it easier for package creators to provide the security they want without a lot of extra wrangling. What NPM has recently tried to backfill via trusted publishing, JSR has built in from the ground up.

Hands-on with the JavaScript Registry

The best part of JSR is that it doesn’t require you to abandon your current tooling. You don’t need to install a new CLI or switch to Deno to use it. It works with npm, yarn, and pnpm right out of the box via a clever compatibility layer.

There are three steps to adding a JSR package to a standard Node project.

1. Ask JSR to add the package

Instead of the install command, you use the JSR tool to add the package. For example, to add a standard library date helper, you would enter:

npx jsr add @std/datetime

Here, we use npx to do a one-time run of the jsr tool with the add command. This adds the datetime library to the NPM project in the working directory.

2. Let JSR configure the package

Behind the scenes, the npx jsr add command creates an .npmrc file in your project root that maps the @jsr scope to the JSR registry (we will use this alias in a moment):

@jsr:registry=https://npm.jsr.io

You don’t have to worry about this mapping yourself; it just tells NPM, “When you see a @jsr package, talk to JSR, not the public NPM registry.”
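The name mapping follows a convention visible in the package.json entry shown in step 3: a JSR package @scope/name is fetched through the @jsr scope as @jsr/scope__name, with a double underscore joining scope and name. The helper below is a hypothetical illustration of that mapping, not part of the jsr CLI, and its name-validation regex is a simplification.

```typescript
// Hypothetical helper: build the npm alias that points a JSR package
// at the npm-compatible @jsr scope.
function toNpmAlias(jsrName: string, versionRange: string): string {
  const match = /^@([a-z0-9-]+)\/([a-z0-9-]+)$/.exec(jsrName);
  if (!match) {
    throw new Error(`Not a scoped JSR package name: ${jsrName}`);
  }
  const [, scope, name] = match;
  // @scope/name becomes @jsr/scope__name (double underscore).
  return `npm:@jsr/${scope}__${name}@${versionRange}`;
}
```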

3. Let JSR add the dependency

Next, JSR adds the package to your package.json using an alias. The dependency will look something like this:

"dependencies": {
  "@std/datetime": "npm:@jsr/std__datetime@^0.224.0"
}

Again, you as a developer won’t typically need to look at this. JSR lets you treat the dependency as a normal one, while NPM and JSR do the work of retrieving it. In your program’s JavaScript files, the dependency looks like any other import:

import { format } from "@std/datetime";

JSR and the evolution of JavaScript

JSR comes from the same mind that gave us Node.js: Ryan Dahl. In his well-known 2018 talk, 10 things I regret about Node.js, Dahl discussed ways the ecosystem had drifted from web standards and spoke on the complexity of node_modules. He also addressed how opaque the Node build process could be.

Dahl initially attempted to correct these issues by creating Deno, a secure runtime that uses standard URLs for imports. But JSR is a more pragmatic pivot. While it is possible to change the runtime you use, the NPM ecosystem of 2.5 million packages is too valuable to abandon.

JSR is effectively a second chance to get the architecture right. It applies Deno’s core philosophies (security by default and first-class TypeScript) to the broader JavaScript world. Importantly, to ensure it serves the whole ecosystem (not only Deno users), the project has moved to an independent open governance board, ensuring it remains a neutral utility for all runtimes.

The future of JSR and NPM

Is JSR on track to be an “NPM killer”? Not yet; maybe never. Instead, it acts as a forcing function for the evolving JavaScript ecosystem. It solves the specific, painful problems of TypeScript publishing and supply-chain security that NPM was never designed to handle.

For architects and engineering leads, JSR represents a safe bet for new internal tooling and libraries, especially those written in TypeScript. JSR’s interoperability with NPM ensures the risk of lock-in is near zero. If JSR disappears tomorrow, you’ll still have the source code.

But the real value of JSR is simpler: It lets developers write code, not build scripts. In a world where configuration often eats more time than logic, that alone makes JSR worth exploring.

How to reduce the risks of AI-generated code 5 Feb 2026, 9:00 am

Vibe coding is the latest tech accelerator, and yes, it kind of rocks. New AI-assisted coding practices are helping developers ship new applications faster, and they’re even allowing other business professionals to prototype workflows and tools without waiting for a full engineering cycle.

Using a chatbot and tailored prompts, vibe coders can build applications in a flash and get them into production within days. Gartner even estimates that by 2028, 40% of new enterprise software will be built with vibe coding tools and techniques, rather than traditional, human-led waterfall or agile software development methods. The speed is intoxicating, so I, for one, am not surprised by that prediction.

The challenge here is that when those who aren’t coders—and even some of those who do work with code for a living—get an application that does exactly what they want, they think the work is over. In truth, it has only just begun.

After the app is built comes the maintenance: updating the app, patching it, scaling it, and defending it. And before you expose real users and data to risk, you must first understand the route that AI took to get your new app working.

How vibe coding works

Vibe coding tools and applications are built on large language models (LLMs) and trained on existing code and patterns. You prompt the model with ideas for your application and, in turn, it generates artifacts like code, configurations, UI components, etc. When you try to run the code, or look at the application’s front end, you’ll see one of two things: the application will look and run the way you were expecting, or an error message will be generated. Then comes the iterative phase, tweaking or changing code until you finally get the desired outcome.

Ideally, the end result is a working app that follows software development best practices based on what AI has learned and produced before. However, AI might just help you produce an application that functions and looks great, but is fragile, inefficient, or insecure at the foundational level.

The biggest issue is that this approach often does not account for the security and engineering experience needed to operate securely in production, where attackers, compliance requirements, customer trust, and operational scale all converge at once. So, if you’re not a security professional or accustomed to developing apps, what do you do?

Just because it works doesn’t mean it’s ready

The first step to solving a problem is knowing that it exists. A vibe-coded prototype can be a win as a proof of concept, but the danger is in treating it as production-ready.

Start with awareness. Use existing security frameworks to check that your application is secure. Microsoft’s STRIDE threat model is a practical way to sanity-check a vibe-coded application before it goes live. STRIDE stands for:

- Spoofing

- Tampering

- Repudiation

- Information disclosure

- Denial of service

- Elevation of privilege

Use STRIDE as a guide to ask yourself the uncomfortable questions before someone else does. For example:

- Can someone pretend to be another user?

- Does the app leak data through errors, logs, or APIs?

- Are there rate limits and timeouts, or can requests be spammed?
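The rate-limit question in particular has a small, concrete answer. Below is a minimal TypeScript sketch of a fixed-window limiter that allows at most a set number of requests per client in each time window; the class name, the keying by client ID, and the in-memory storage are assumptions for illustration, and an app running on several instances would need shared storage instead.

```typescript
// Hypothetical fixed-window rate limiter kept in process memory.
class FixedWindowRateLimiter {
  private readonly limit: number;
  private readonly windowMs: number;
  private readonly windows = new Map<string, { start: number; count: number }>();

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true if the request is allowed, false if it should be rejected.
  allow(clientId: string, now: number = Date.now()): boolean {
    const current = this.windows.get(clientId);
    if (!current || now - current.start >= this.windowMs) {
      // First request, or the previous window expired: start a new window.
      this.windows.set(clientId, { start: now, count: 1 });
      return true;
    }
    if (current.count < this.limit) {
      current.count += 1;
      return true;
    }
    return false; // limit reached for this window
  }
}
```

A request handler would call limiter.allow(clientId) first and respond with HTTP 429 Too Many Requests when it returns false. Fixed windows are the simplest scheme; sliding windows or token buckets smooth out bursts at window boundaries.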

To prevent those potential issues, you can check that your new vibe-coded application handles identities correctly and is secure by default. On top of this, you should make sure that the app code doesn’t have any embedded credentials that others can access.
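For embedded credentials, the usual fix is to read secrets from the environment at startup and fail fast if one is missing. This is a minimal sketch; the requireSecret helper and the PAYMENTS_API_KEY name are hypothetical, and the environment is passed in as a plain object so the function works with Node’s process.env or any other source.

```typescript
type Env = Record<string, string | undefined>;

// Read a required secret from the environment instead of hardcoding it.
// Throwing at startup makes a missing secret fail loudly, not silently.
function requireSecret(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    // The error names the variable only, never a value.
    throw new Error(`Missing required secret: ${name}`);
  }
  return value;
}

// Anti-pattern a STRIDE review should catch:
// const apiKey = "hardcoded-key-123"; // visible to anyone who can read the code
```

In Node, the call would be requireSecret(process.env, "PAYMENTS_API_KEY"), with the value supplied by the deployment platform’s secret store rather than committed to the repository.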

These real-world concerns are common to all applications, whether they’re built by AI or humans. Being aware of issues preemptively allows you to take practical steps toward a more robust defense. This takes you from “it works” to “we understand how it could fail.”

Humans are still necessary for good vibes

Regardless of your personal opinion on vibe coding, it’s not going anywhere. It helps developers and line-of-business teams build what they need (or want), and that is useful. That newfound freedom and ability to create apps, however, must be matched with awareness that security is necessary and cannot be assumed.

The goal of secure vibe coding isn’t to kill momentum—it’s to keep the speed of innovation high and reduce the potential blast radius for threats.

Whatever your level of experience with AI-assisted coding, or even coding in general, there are tools and practices you can use to ensure your vibe-coded applications are secure. When these applications are developed quickly, any security steps must be just as fast-paced and easy to implement. This begins with taking responsibility for your code from the start, and then maintaining it over time. Start on security early, ideally as you plan your application and begin its initial reviews. Earlier is always better than trying to bolt security on afterward.

After your vibe-coded app is complete and you’ve done some initial security due diligence, you can then look into your long-term approach. While vibe coding is great for testing or initial builds, it is often not the best approach for full-scale applications that must support a growing number of users. At this point, you can implement more thorough threat modeling and automated safety guardrails. Bring in a developer or engineer while you’re at it, too.

There are many other security best practices to begin following at this point in the process, too. Using software scanning tools, for example, you can see which software packages and additional tools your application relies on, and then check that list for potential vulnerabilities. Alongside evaluating third-party risk, you can move to CI/CD pipeline security checks, such as blocking hardcoded secrets with pre-commit hooks. You can also record metadata about any AI-assisted contributions within the application to show what was written with AI, which models were used to generate that code, and which LLM tools were involved in building your application.
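A pre-commit secret check comes down to scanning staged text for credential-shaped strings. The sketch below is a minimal illustration, not a substitute for a dedicated scanner: its two example rules (the AKIA prefix used by AWS access key IDs and a generic api_key = "..." assignment) and the findSecrets name are assumptions chosen for the example.

```typescript
interface SecretFinding {
  line: number; // 1-based line number
  rule: string;
}

// Illustrative rules only; real scanners ship many more patterns plus entropy checks.
const RULES: Array<{ name: string; pattern: RegExp }> = [
  { name: "aws-access-key-id", pattern: /\bAKIA[0-9A-Z]{16}\b/ },
  {
    name: "generic-api-key",
    pattern: /\b(api[_-]?key|secret|token)\s*[:=]\s*["'][^"']{8,}["']/i,
  },
];

// Report every line that matches any rule (no global flag, so test() is stateless).
function findSecrets(source: string): SecretFinding[] {
  const findings: SecretFinding[] = [];
  source.split("\n").forEach((text, index) => {
    for (const rule of RULES) {
      if (rule.pattern.test(text)) {
        findings.push({ line: index + 1, rule: rule.name });
      }
    }
  });
  return findings;
}
```

A hook would run findSecrets over each staged file and exit with a non-zero status on any finding, which makes Git abort the commit.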

Ultimately, vibe coding helps you build quickly and deploy what you want to see in the world. And while speed is great, security should be non-negotiable. Without the right security practices in place, vibe coding opens you up to a swarm of preventable problems, a slew of undue risk, or worse.

—

New Tech Forum provides a venue for technology leaders—including vendors and other outside contributors—to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to doug_dineley@foundryco.com.

Deno Sandbox launched for running AI-generated code 5 Feb 2026, 9:00 am

Deno Land, maker of the Deno runtime, has introduced Deno Sandbox, a secure environment built for code generated by AI agents. The company also announced the long-awaited general availability of Deno Deploy, a serverless platform for running JavaScript and TypeScript applications. Both were announced on February 3.

Now in beta, Deno Sandbox offers lightweight Linux microVMs running as protected environments in the Deno Deploy cloud. According to the company, Deno Sandbox defends against prompt injection attacks, in which a user or AI attempts to run malicious code. Secrets such as API keys never enter the sandbox and appear only when an outbound HTTP request is sent to a pre-approved host, the company said.

Deno Sandbox was created in response to the rise in AI-driven development, explained Deno co-creator Ryan Dahl, as more LLM-generated code is being released with the ability to call external APIs using real credentials, without human review. In this scenario, he wrote, “Sandboxing the compute isn’t enough. You need to control network egress and protect secrets from exfiltration.” Deno Sandbox provides both, according to Dahl. It specializes in workloads where code must be generated, evaluated, or safely executed on behalf of an untrusted user.
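The idea of keeping secrets out of the sandbox can be illustrated with a check at the network boundary: code inside the sandbox only sees a placeholder, and the real credential is substituted only when an outbound request targets a host approved for that secret. This is a conceptual sketch of the pattern Dahl describes, not Deno Sandbox’s actual API or implementation; the placeholder format, prepareOutbound, and EgressPolicy are hypothetical.

```typescript
// Hypothetical egress policy: allowed hosts, and which hosts may receive each secret.
interface EgressPolicy {
  allowedHosts: string[];
  secrets: Map<string, { value: string; hosts: string[] }>;
}

// Sandboxed code only ever sees placeholders such as "{{SECRET:API_KEY}}".
const PLACEHOLDER = /\{\{SECRET:([A-Z0-9_]+)\}\}/g;

// Runs outside the sandbox, at the network boundary. Returns the headers to send,
// or throws if the destination host or a requested secret is not permitted.
function prepareOutbound(
  host: string,
  headers: Record<string, string>,
  policy: EgressPolicy,
): Record<string, string> {
  if (policy.allowedHosts.indexOf(host) === -1) {
    throw new Error(`Egress to ${host} is not allowed`);
  }
  const result: Record<string, string> = {};
  for (const key of Object.keys(headers)) {
    result[key] = headers[key].replace(PLACEHOLDER, (_match: string, name: string) => {
      const secret = policy.secrets.get(name);
      if (!secret || secret.hosts.indexOf(host) === -1) {
        throw new Error(`Secret ${name} may not be sent to ${host}`);
      }
      return secret.value; // real value exists only in the outgoing request
    });
  }
  return result;
}
```

Because substitution happens outside the sandbox, generated code that tries to send the placeholder to an unapproved host gets an error instead of the key.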

Developers can create a Deno Sandbox programmatically via Deno’s JavaScript or Python SDKs. The announcement included the following workload examples for Deno Sandbox:

- AI agents and copilots that need to run code as they reason

- Secure plugin or extension systems

- Vibe-coding and collaborative IDE experiences

- Ephemeral CI runners and smoke tests

- Customer-supplied or user-generated code paths

- Instant dev servers and preview environments

Also announced on February 3 was the general availability of Deno Deploy, a platform for running JavaScript and TypeScript applications in the cloud or on a user’s own infrastructure. It provides a management plane for deploying and running applications with the built-in CLI or through integrations such as GitHub Actions, Deno said. The platform is a rework of Deploy Classic, with a new dashboard and a new execution environment that uses Deno 2.0.

Apple’s Xcode 26.3 brings integrated support for agentic coding 4 Feb 2026, 7:46 pm

Apple is previewing Xcode 26.3 with integrated support for coding agents such as Anthropic’s Claude Agent and OpenAI’s Codex.

Announced February 3, Xcode 26.3, the Apple-platforms-centered IDE, is available as a release candidate for members of the Apple Developer Program, with a release coming soon to the App Store. This latest version expands on intelligence features introduced in Xcode 26 in June 2025, which offered a coding assistant for writing and editing in the Swift language.

In Xcode version 26.3, coding agents have access to more of Xcode’s capabilities. Agents like Codex and Claude Agent can work autonomously throughout the development lifecycle, supporting streamlined workflows and faster iteration. Agents can search documentation, explore file structures, update project settings, and verify their work visually by capturing Xcode previews and iterating through fixes and builds, according to Apple. Developers now can incorporate agents’ advanced reasoning directly into their development workflow.

Combining the power of these agents with Xcode’s native capabilities provides the best results when developing for Apple platforms, according to Apple. Also new in Xcode 26.3 is support for Model Context Protocol (MCP), an open standard that gives developers the flexibility to use any compatible agent or tool with Xcode.

GitHub eyes restrictions on pull requests to rein in AI-based code deluge on maintainers 4 Feb 2026, 11:53 am

GitHub helped open the floodgates to AI-written code with its Copilot. Now it’s considering closing the door, at least in part, for the short term.

GitHub is exploring what already seems like a controversial idea that would allow maintainers of repositories or projects to delete pull requests (PRs) or turn off the ability to receive pull requests as a way to address an influx of low-quality, often AI-generated contributions that many open-source projects are struggling to manage.

Last week, GitHub product manager Camilla Moraes posted a community discussion thread on GitHub seeking feedback on the solutions that it was mulling in order to address the “increasing volume of low-quality contributions” that was creating significant operational challenges for maintainers of open source projects and repositories.

“We’ve been hearing from you that you’re dedicating substantial time to reviewing contributions that do not meet project quality standards for a number of reasons — they fail to follow project guidelines, are frequently abandoned shortly after submission, and are often AI-generated,” Moraes wrote before listing out the solutions being planned.

AI is breaking the trust model of code review

Several users in the discussion thread agreed with Moraes’ premise that AI-generated code was creating challenges for maintainers.

Jiaxiao Zhou, a software engineer on Microsoft’s Azure Container Upstream team and maintainer of Containerd’s Runwasi project and SpinKube, for one, pointed out that AI-generated code was making line-by-line review of everything that ships unsustainable for maintainers.

The maintainer lists several reasons, such as a breakdown in the trust model behind code reviews, where reviewers can no longer assume contributors fully understand what they submit; the risk that AI-generated pull requests may appear structurally sound while being logically flawed or unsafe; and the fact that line-by-line reviews remain mandatory for production code but do not scale to large, AI-assisted changes.

To address these challenges in the short term, Moraes wrote that GitHub was planning to provide configurable pull request permissions, meaning the ability to control pull request access at a more granular level by restricting contributions to collaborators only or disabling PRs for specific use cases like mirror repositories.

“This also eliminates the need for custom automations that various open source projects are building to manage contributions,” Moraes wrote.

Community pushes back on disabling or deleting pull requests

However, the specific suggestion on disabling PRs was met with skepticism.

A user with the handle ThiefMaster suggested that GitHub should not look at restricting access to previously opened PRs as it may lead to loss of content or access for someone. Rather, the user suggested that GitHub should allow users to access them with a direct link.

Moraes, in response, seemed to align with the user’s view and said that GitHub may include the user’s suggestion.

In addition, GitHub is also mulling the idea of providing maintainers with the ability to remove spam or low-quality PRs directly from the interface to improve repository organization.

This suggestion was met with even more skepticism from users.

While ThiefMaster suggested that GitHub should allow a limited timeframe for a maintainer to delete a PR, most likely due to low activity, other users, such as Tibor Digana, Hayden, and Matthew Gamble, were completely against the idea.

The long-term suggestions from Moraes, which included using AI-based tools to help maintainers weed out “unnecessary” submissions and focus on the worthy ones, too, received considerable flak.

While Moraes and GitHub argue that these AI-based tools would cut down the time required to review submissions, users such as Stephen Rosen argue that AI-based tools would do quite the opposite because they are prone to hallucinations, forcing the maintainer to go through each line of code anyway.

AI should reduce noise, not add uncertainty

Paul Chada, co-founder of agentic AI software startup Doozer AI, argued that the usefulness of AI-based review tools will hinge on the strength of the guardrails and filters built into them.

Without those controls, he said, such systems risk flooding maintainers with submissions that lack project context, waste review time, and dilute meaningful signal.

“Maintainers don’t want another system they have to second-guess. AI should act like a spam filter or assistant, not a reviewer with authority. Used carefully, it reduces noise. Used carelessly, it adds a new layer of uncertainty instead of removing one,” Chada said.

GitHub has also made other long-term suggestions to address the cognitive load on reviewers, such as improved visibility and attribution when AI tools are used throughout the PR lifecycle, and more granular controls for determining who can create and review PRs beyond blocking all users or restricting to collaborators only.

Azure outage disrupts VMs and identity services for over 10 hours 4 Feb 2026, 11:36 am

Microsoft’s Azure cloud platform suffered a broad multi-hour outage beginning on Monday evening, disrupting two critical layers of enterprise cloud operations. The outage, which lasted over 10 hours, began at 19:46 UTC on Monday and was resolved by 06:05 UTC on Tuesday.

The incident initially left customers unable to deploy or scale virtual machines in multiple regions. This was followed by a related platform issue with the Managed Identities for Azure Resources service in the East US and West US regions between 00:10 UTC and 06:05 UTC on Tuesday. The disruption also briefly affected GitHub Actions.

Policy change at the core of disruption

A policy change unintentionally applied to a subset of Microsoft-managed storage accounts, including those used to host virtual machine extension packages, led to this outage. The change blocked public read access, which disrupted scenarios such as virtual machine extension package downloads, according to Microsoft’s status history.

Logged under tracking ID FNJ8-VQZ, some customers experienced failures when deploying or scaling virtual machines, including errors during provisioning and lifecycle operations. Other services were impacted as well.

Azure Kubernetes Service users experienced failures in node provisioning and extension installation, while Azure DevOps and GitHub Actions users faced pipeline failures when tasks required virtual machine extensions or related packages. Operations that required downloading extension packages from Microsoft-managed storage accounts also saw degraded performance.

Although an initial mitigation was deployed within about two hours, it triggered a second platform issue involving Managed Identities for Azure Resources. Customers attempting to create, update, or delete Azure resources, or acquire Managed Identity tokens, began experiencing authentication failures.

In an entry logged under tracking ID M5B-9RZ, Microsoft’s status history page acknowledged that, following the earlier mitigation, a large spike in traffic overwhelmed the managed identities platform service in the East US and West US regions.

This impacted the creation and use of Azure resources with assigned managed identities, including Azure Synapse Analytics, Azure Databricks, Azure Stream Analytics, Azure Kubernetes Service, Microsoft Copilot Studio, Azure Chaos Studio, Azure Database for PostgreSQL Flexible Servers, Azure Container Apps, Azure Firewall, and Azure AI Video Indexer.

After multiple infrastructure scale-up attempts failed to handle the backlog and retry volumes, Microsoft ultimately removed traffic from the affected service to repair the underlying infrastructure without load.

“The outage didn’t just take websites offline, but it halted development workflows and disrupted real-world operations,” said Pareekh Jain, CEO at EIIRTrend & Pareekh Consulting.

Cloud outages on the rise

Cloud outages have become more frequent in recent years, with major providers such as AWS, Google Cloud, and IBM all experiencing high-profile disruptions. AWS services were severely impacted for more than 15 hours when a DNS problem rendered the DynamoDB API unreliable.

In November, a bad configuration file in Cloudflare’s Bot Management system led to intermittent service disruptions across several online platforms. In June, an invalid automated update disrupted Google Cloud’s identity and access management (IAM) system, leaving users unable to use Google to authenticate on third-party apps.

“The evolving data center architecture is shaped by the shift to more demanding, intricate workloads driven by the new velocity and variability of AI. This rapid expansion is not only introducing complexities but also challenging existing dependencies. So any misconfiguration or mismanagement at the control layer can disrupt the environment,” said Neil Shah, co-founder and VP at Counterpoint Research.

Preparing for the next cloud incident

This is not an isolated incident. For CIOs, the event only reinforces the need to rethink resilience strategies.

When a hyperscale dependency fails, waiting it out is not a recommended strategy, Jain said. In the immediate aftermath, CIOs should focus on three steps: stabilize, prioritize, and communicate. “First, stabilize by declaring a formal cloud incident with a single incident commander, quickly determining whether the issue affects control-plane operations or running workloads, and freezing all non-essential changes such as deployments and infrastructure updates.”

Jain added that the next step is to prioritize restoration by protecting customer-facing run paths, including traffic serving, payments, authentication, and support, and, if CI/CD is impacted, by shifting critical pipelines to self-hosted or alternate runners while queuing releases behind a business-approved gate. The final step is to communicate and contain by issuing regular internal updates that clearly state impacted services, available workarounds, and the time of the next update, and by activating pre-approved customer communication templates if external impact is likely.

Shah noted that these outages are a clear warning for enterprises and CIOs to diversify their workloads across cloud service providers (CSPs) or go hybrid, and to add the necessary redundancies. To keep future outages from disrupting operations, they should also manage the size of their CI/CD pipelines and keep them lean and modular.

Scaling strategies for real-time and non-real-time workloads, especially for crucial code or services, should also be well thought through. CIOs need a clear understanding of, and operational visibility into, hidden dependencies so they know what could be impacted in such scenarios, and they need a robust mitigation plan.

Six reasons to use coding agents 4 Feb 2026, 9:00 am

I use coding agents every day. I haven’t written a line of code for any of my side projects in many weeks. I don’t use coding agents in my day job yet, but only because the work requires a deeper knowledge of a huge code base than most large language models can deal with. I expect that will change.

Like everyone else in the software development business, I have been thinking a lot about agentic coding. All that thinking has left me with six thoughts about the advantages of using coding agents now, and how agentic coding will change developer jobs in the future.

Coding agents are tireless

If I said to a junior programmer, “Go and fix all 428 hints in this code base, and when you are done, fix all 434 warnings,” they would probably say, “Sure!” and then complain about the tediousness of the task to all of their friends at the lunch table.

But if I were to ask a coding agent to do it, it would do it without a single complaint. Not one. And it would certainly complete the task a hundred times faster than that junior programmer would. Now, that’s nothing against humans, but we aren’t tireless. We get bored, and our brains turn fuzzy with endless repetitive work.

Coding agents will grind away endlessly, tirelessly, and diligently at even the most mind-numbingly boring task that you throw at them.

Coding agents are slavishly devoted

Along those same lines, coding agents will do what you ask. Not that people won’t, but coding agents will do pretty much exactly what you ask them to do. Now, this does mean that we need to be exacting about what we ask for. Precise, fastidious prompting is the next real skill that developers will need. It’s probably both a blessing and a curse, but if you ask for a self-referential sprocket with cascading thingamabobs, a coding agent will build one for you.

Coding agents ask questions you didn’t think to ask

One thing I always do when I prompt a coding agent is to tell it to ask me any questions that it might have about what I’ve asked it to do. (I need to add this to my default system prompt…) And, holy mackerel, if it doesn’t ask good questions. It almost always asks me things that I should have thought of myself.

Coding agents mean 10x more great ideas

If you can get a coding agent to code your idea in two hours, then you could undoubtedly turn many more ideas into working software over the course of a month or a year. If you can add a major feature to your existing app in a day, then you could add many more major features to your app in a month than you could before. When coding is no longer the bottleneck, you will be limited not by your ability to code but by your ability to come up with ideas for software.

Ultimately, this last point is the real kicker. We no longer have to ask, “Can I code this?” Instead, we can ask ourselves, “What can I build?” If you can add a dozen features a month, or build six new applications a week, the real question becomes, “Do I have enough good ideas to fill out my work day?”

Coding agents make junior developers better

A common fear is that inexperienced developers won’t be employable because they can’t be trusted to monitor the output of coding agents. I’m not sure that is something to worry about.

Instead of mentoring juniors to be better coders, we’ll mentor them to be better prompt writers. Instead of saying, “Code this,” we’ll be saying, “Understand this problem and get the coding agent to implement it.”

Junior developers can learn what they need to know in the world of agentic coding just like they learn what they need to know in the world of human coding. With coding agents, juniors will spend more time learning key concepts and less time grinding out boilerplate code.

Everyone has to start somewhere. Junior developers will start from scratch and head to a new and different place.

Coding agents will not take your job

A lot of keyboards have been pounded over concerns that coding agents are coming for developer jobs. However, I’m not the least bit worried that software developers will end up selling apples on street corners. I am worried that some developers will struggle to adapt to the changing nature of the job.

Power tools didn’t take carpentry jobs away; they made carpenters more productive and precise. Developers might find themselves writing a lot less code, but we will be doing the work of accurately describing what needs implementing, monitoring those implementations, and making sure that our inputs to the coding agents are producing the correct outputs.

We may even end up not dealing with code at all. Ultimately, software development jobs will be more about ideas than syntax. And that’s good, right?

AI is not coming for your developer job 4 Feb 2026, 9:00 am

It’s easy to see why anxiety around AI is growing—especially in engineering circles. If you’re a software engineer, you’ve probably seen the headlines: AI is coming for your job.

That fear, while understandable, does not reflect how these systems actually work today, or where they’re realistically heading in the near term.

Despite the noise, agentic AI is still confined to deterministic systems. It can write, refactor, and validate code. It can reason through patterns. But the moment ambiguity enters the equation—where human priorities shift, where trade-offs aren’t binary, where empathy and interpretation are required—it falls short.

Real engineering isn’t just deterministic. And building products isn’t just about code. It’s about context—strategic, human, and situational—and right now, AI doesn’t carry that.

Agentic AI as it exists today

Today’s agentic AI is highly capable within a narrow frame. It excels in environments where expectations are clearly defined, rules are prescriptive, and goals are structurally consistent. If you need code analyzed, a test written, or a bug flagged based on past patterns, it delivers.

These systems operate like trains on fixed tracks: fast, efficient, and capable of navigating anywhere tracks are laid. But when the business shifts direction—or strategic bias changes—AI agents stay on course, unaware the destination has moved.

Sure, they will produce output, but their contribution will be sideways or even negative rather than moving forward in sync with where the company is going.

Strategy is not a closed system

Engineering doesn’t happen in isolation. It happens in response to business strategy—which informs product direction, which informs technical priorities. Each of these layers introduces new bias, interpretation, and human decision-making.

And those decisions aren’t fixed. They shift with urgency, with leadership, with customer needs. A strategy change doesn’t cascade neatly through the organization as a deterministic update. It arrives in fragments: a leadership announcement here, a customer call there, a hallway chat, a Slack thread, a one-on-one meeting.

That’s where interpretation happens. One engineer might ask, “What does this shift mean for what’s on my plate this week?” Faced with the same question, another engineer might answer it differently. That kind of local, interpretive decision-making is how strategic bias actually takes effect across teams. And it doesn’t scale cleanly.

Agentic AI simply isn’t built to work that way—at least not yet.

Strategic context is missing from agentic systems

To evolve, agentic AI needs to operate on more than static logic. It must carry context—strategic, directional, and evolving.

That means not just answering what a function does, but asking whether it still matters. Whether the initiative it belongs to is still prioritized. Whether this piece of work reflects the latest shift in customer urgency or product positioning.

Today’s AI tools are disconnected from that layer. They don’t ingest the cues that product managers, designers, or tech leads act on instinctively. They don’t absorb the cascade of a realignment and respond accordingly.

Until they do, these systems will remain deterministic helpers—not true collaborators.

What we should be building toward

To be clear, the opportunity isn’t to replace humans. It’s to elevate them—not just by offloading execution, but by respecting the human perspective at the core of every product that matters.

The more agentic AI can handle the undifferentiated heavy lifting—the tedious, mechanical, repeatable parts of engineering—the more space we create for humans to focus on what matters: building beautiful things, solving hard problems, and designing for impact.

Let AI scaffold, surface, validate. Let humans interpret, steer, and create—with intent, urgency, and care.

To get there, we need agentic systems that don’t just operate in code bases, but operate in context. We need systems that understand not just what’s written, but what’s changing. We need systems that update their perspective as priorities evolve.

Because the goal isn’t just automation. It’s better alignment, better use of our time, and better outcomes for the people who use what we build.

And that means building tools that don’t just read code, but that understand what we’re building, who it’s for, what’s at stake, and why it matters.

—

New Tech Forum provides a venue for technology leaders—including vendors and other outside contributors—to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to doug_dineley@foundryco.com.

4 self-contained databases for your apps 4 Feb 2026, 9:00 am