
ongoing by Tim Bray

ongoing fragmented essay by Tim Bray

Long Links 3 Feb 2026, 8:00 pm

Welcome to the first Long Links of this so-far-pretty-lousy 2026. I can’t imagine that anyone will have time to take in all of these, but there’s a good chance one or two might brighten your day.

Unclassified

Thomas Piketty is always right. For example, Europe, a social-democratic power.

Lying is wrong. Conservatives do it all the time. To be fair, that piece is about the capital-C flavor, as in the Canadian Tories. But still.

Clothing is open-source: “If you slice the different parts off with a seamripper, lay them all down, trace them on new fabric, cut them out, and stitch them back together, you can effectively clone and fork garments.” From Devine Lu Linvega.

The Universe is weird. The Webb telescope keeps showing astronomers things that shouldn’t be there. For example, An X-ray-emitting protocluster at z ≈ 5.7 reveals rapid structure growth; ignore the title and read the Abstract and Main sections. With pretty pictures!

Music

One time in Vegas, I was giving a speech, something about cloud computing, and was surprised to find the venue an ornate velvet-lined theater. I found out from the staff, and then relayed to the audience, that the last human before me to stand on this stage in front of an audience had been Willie Nelson. I was tempted to fall to my knees and kiss the boards. How Willie Nelson Sees America, from The New Yorker, is subtitled “On the road with the musician, his band, and his family” but it ends up being the kernel of a good biography of an interesting person. Bonus link: on YouTube, Willie Nelson - Teatro, featuring Daniel Lanois & Emmylou Harris, Directed by Wim Wenders. Strong stuff.

Speaking of recorded music, check out Why listening parties are everywhere right now. Huh? They are? As a deranged audiophile, sounds like my kind of thing. I’d go.

Somewhere to put worker bees

When I was working at AWS in downtown Vancouver back starting in 2015, a lot of our junior engineers lived in these teeny-tiny little one-room-tbh apartments. It worked out pretty well for them, they were affordable and an easy walk from the office and these people hadn’t built up enough of a life to need much more room. For a while this trend of so-called-“studio” flats was the new hotness in Vancouver and I guess around quite a bit of the developed world. Us older types with families would look at the condo market and tell each other “this is stupid”.

We were right. The bottom is falling out and they’re sitting empty in their thousands. And not just the teeniest either, the whole condo business is in the toilet. It didn’t help that for a few years all the prices went up every year (until they didn’t) and you could make serious money flipping unbuilt condos, so lots of people did (until they didn’t).

Anyhow, here’s a nice write-up on the subject: ‘Somewhere to put worker bees’: Why Canada's micro-condos are losing their appeal. (From the BBC, huh?)

AI AI AI

Sorry, I can’t not relay pro- and anti-GenAI posts, because that conversation is affecting all our lives just now. I am actually getting ready to decloak my own conclusions, but for the moment I’m just sharing essays on the subject that strike me as well-written and enjoyable for their own sake. Thus ‘AI’ is a dick move, redux from Baldur Bjarnason. Boy, is he mad.

Sam Ruby has been doing some extremely weird shit, running Rails in the browser, as in without even a network connection or a Ruby runtime. Yes, AI was involved in the construction.

Software

There’s this programming language called Ivy that is in the APL lineage; that acronym will leave young’uns blank but a few greying eyebrows will have been raised. Anyhow, Implementing the transcendental functions in Ivy is delightfully geeky, diving deep with no awkwardness. By no less than Rob Pike.

Check out Mike Swanson’s Backseat Software. That’s “backseat” as in “backseat driver”, which today’s commercial software has now, annoyingly, become. This piece doesn’t make any points that I haven’t heard (or made myself) elsewhere, but it pulls a lot of the important ones together in a well-written and compelling package. Recommended.

Old Googler Harry Glaser reacts with horror to the introduction of advertising by OpenAI, and makes gloomy predictions about how it will evolve. His predictions are obviously correct.

The title says it: Discovering a Digital Photo Editing Workflow Beyond Adobe. It’d be a tough transition for me, but the relationship with Adobe gets harder and harder to justify.

Indigenous reconciliation

Khelsilem is one of the loudest and clearest voices coming out of the Squamish nation, one of the larger and better-organized Indigenous communities around here.

There has been a steady drumbeat of Indigenous litigation going on for decades as a consequence of the fact that the British colonialists who seized the territory in what we now call British Columbia didn’t bother to sign treaties with the people who were already there; they just assumed ownership. The Indigenous people have been winning a lot of court cases, which makes people nervous.

Anyhow, Khelsilem’s The Real Source of Canada's Reconciliation Panic covers the ground. I’m pretty sure British Columbians should read this, and suspect that anyone in a jurisdiction undergoing similar processes should too.

Resonant computing, Black and Blue sky

There’s this thing called the Resonant Computing Manifesto, whose authors and signatories include names you’d probably recognize. Not mine; the first of its Five Principles begins with “In the era of AI…” Also, it is entirely oblivious to the force driving the enshittification of social-media platforms: Monopoly ownership and the pathologies of late-stage capitalism.

Having said that, the vision it paints is attractive. And having said that, it’s now featured on the flags waved by the proponents of ATProto, which is to say Bluesky. See Mike Masnick’s ATproto: The Enshittification Killswitch That Enables Resonant Computing (Mike is on Bluesky Corp’s Board). That piece is OK but, in the comments, Masnick quickly gets snotty about the Fediverse and Mastodon, in a way that I find really off-putting. And once again, says nothing about the underlying economic realities that poison today’s platforms.

I want to like Bluesky, but I’m just too paranoid and cynical about money. It is entirely unclear who is funding the people and infrastructure behind Bluesky, which matters, because if Bluesky Corp goes belly-up, so does the allegedly-decentralized service.

On the other hand, Blacksky is interesting. They are trying to prove that ATProto really can be made decentralized in fact not just in theory. Their ideas and their people are stimulating, and their finances are transparent. I’ll be moving my ATProto presence to Blacksky when I get some cycles and the process has become a little more automated.

Good crypto

The cryptography community is working hard on the problem of what happens should quantum computers ever become real products as opposed to over-invested fever dreams. Because if they ever work, they can probably crack the algorithms that we’ve been using to provide basic Web privacy.

The problem is technically hard — there are good solutions though — and also politically fraught, because maybe the designers or standards orgs are corrupt or incompetent. It’s reasonable to worry about this stuff and people do. They probably don’t need to: Sophie Schmieg dives deep in ML-KEM Mythbusting.

Books

Here’s one of the most heartwarming things I’ve read in months: A Community-Curated Nancy Drew Collection. Reminder: The Internet can still be great.

John Lanchester’s For Every Winner a Loser, ostensibly a review of two books about famous financiers, is in fact an extended howl of (extremely instructive) rage against the financialization of everything and the unrelenting increase in inequality. What we need to do is to take the ill-gotten gains away from these people and put them to a use, any use, that improves human lives.

I talk a lot about late-stage capitalism. But Sven Beckert has published a 1,300-page monster entitled Capitalism; the link is to a NYT review, which makes me want to read it.

Charlie Stross, the sci-fi author, likes webtoons and recommends a bunch. Be careful, do not follow those links if you’re already short of time. Semi- or fully-retired? Go ahead!

I have history with dictionaries. For several years of my life in the late Eighties, I was the research project manager for the New Oxford English Dictionary project at the University of Waterloo. Dictionaries are a fascinating topic and, for much of the history of the publishing industry, were big money-makers; they dominate any short list of the biggest-selling books in history. Then came the Internet.

Anyhow, Louis Menand’s Is the Dictionary Done For? starts with a review of a book by Stefan Fatsis entitled Unabridged: The Thrill of (and Threat to) the Modern Dictionary which I haven’t read and probably won’t, but oh boy, Menand’s piece is big and rich and polished and just a fantastic read. If, that is, you care about words and languages. I understand there are those who don’t, which is weird. I’ll close with a quote from Menand:

“The dictionary projects permanence,” Fatsis concludes, “but the language is Jell-O, slippery and mutable and forever collapsing on itself.” He’s right, of course. Language is our fishbowl. We created it and now we’re forever trapped inside it.

Quamina v2.0.0 20 Jan 2026, 8:00 pm

There’ve been a few bugfixes and optimizations since 1.5, but the headline is: Quamina now knows regular expressions. This is roughly the fourth anniversary of the first check-in and the third of v1.0.0. (But I’ve been distracted by family health issues and other tech enthusiasms.) Open-source software, it’s a damn fine hobby.

Did I mention optimizations? There are (sob) also regressions; introducing REs had measurable negative impacts on other parts of the system. But it’s a good trade-off. When you ship software that’s designed for pattern-matching, it should really do REs. The RE story, about a year long, can be read starting here.

Quamina facts

About 18K lines of code (excluding generated code), 12K of which are unit tests. The RE feature makes the tests run slower, which is annoying.

Adding Quamina to your app will bulk your executable size up by about 100K, largely due to Unicode tables.

There are a few shreds of AI-assisted code, none of much importance.

A Quamina instance can match incoming data records on my 2023 M2 Mac at millions per second without much dependence on how many patterns are being matched at once. This assumes not too many horrible regular expressions. That’s per-thread of course, and Quamina does multithreading nicely.

Next?

The open issues are modest in number but some of them will be hard.

I think I’m going to ignore that list for a while (PRs welcome, of course) and work on optimization. The introduction of epsilon transitions was required for regular expressions, but they really bog the matching process down. At Quamina’s core is the finite-automaton merge logic, which contains fairly elegant code but generally throws up its hands when confronted with epsilons and does the simplest thing that could possibly work. Sometimes at an annoyingly slow pace.
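For readers who haven’t met epsilon transitions, here is a minimal sketch in Go of the set-of-states simulation they force on a matcher. It is not Quamina’s code: the `state` type, `epsilonClosure`, `matches`, and the hand-built NFA for `a*b` are all hypothetical illustrations. The point is that after every input byte, the matcher has to chase epsilon links to discover the full set of states it is really in, which is work a plain DFA never does.

```go
package main

import "fmt"

// state is a hypothetical NFA state: labeled byte transitions, epsilon
// links that can be followed without consuming input, and a final flag.
type state struct {
	next     map[byte][]*state
	epsilons []*state
	final    bool
}

// epsilonClosure returns every state reachable from the given states by
// following zero or more epsilon links, each state appearing once.
func epsilonClosure(states []*state) []*state {
	seen := make(map[*state]bool)
	var out []*state
	stack := append([]*state(nil), states...)
	for len(stack) > 0 {
		s := stack[len(stack)-1]
		stack = stack[:len(stack)-1]
		if seen[s] {
			continue
		}
		seen[s] = true
		out = append(out, s)
		stack = append(stack, s.epsilons...)
	}
	return out
}

// matches steps from the current set of states to the next set for each
// input byte, recomputing the epsilon closure after every step.
func matches(start *state, input []byte) bool {
	current := epsilonClosure([]*state{start})
	for _, b := range input {
		var next []*state
		for _, s := range current {
			next = append(next, s.next[b]...)
		}
		current = epsilonClosure(next)
		if len(current) == 0 {
			return false // no live states left: fail early
		}
	}
	for _, s := range current {
		if s.final {
			return true
		}
	}
	return false
}

// buildAStarB hand-builds an NFA for a*b:
// s0 -ε-> s1, s0 -ε-> s2, s1 -a-> s0, s2 -b-> s3 (final).
func buildAStarB() *state {
	s3 := &state{final: true}
	s2 := &state{next: map[byte][]*state{'b': {s3}}}
	s1 := &state{}
	s0 := &state{epsilons: []*state{s1, s2}}
	s1.next = map[byte][]*state{'a': {s0}}
	return s0
}

func main() {
	nfa := buildAStarB()
	for _, in := range []string{"b", "aaab", "aa", "ba"} {
		fmt.Printf("%q matches a*b: %v\n", in, matches(nfa, []byte(in)))
	}
}
```

This version de-dupes with a Go map inside the closure, which keeps the sets honest but puts a hash lookup on the hot path; that trade-off is part of why epsilon handling is slow.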

Having said that, to optimize you need a good benchmark that pressures the software-under-test. Which is tricky, because Quamina is so fast that it’s hard to feed it enough data to stress it without the feed-the-data code dominating the runtime and memory use. If anybody has a bright idea for how to pull together a good benchmark I’d love to hear it. I’m looking at b.Loop() in Go 1.24, any reason not to go there?
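One common way to keep input generation out of the measurement is to build the whole corpus up front, reset the timer, and cycle through the pre-built records. Here’s a minimal sketch of that pattern, offered as a suggestion rather than a known fix for Quamina; `makeEvents` and `matchStub` are hypothetical, with `matchStub` standing in for whatever matcher is under test. It uses the classic `b.N` loop so it runs on older toolchains; on Go 1.24 and later, `for b.Loop() { ... }` can replace that loop, and its documentation says it resets the timer on its first call and keeps the loop body from being optimized away.

```go
package main

import (
	"fmt"
	"strconv"
	"testing"
)

// makeEvents pre-generates n small JSON records so that producing input
// isn't part of what the benchmark measures.
func makeEvents(n int) [][]byte {
	events := make([][]byte, n)
	for i := range events {
		events[i] = []byte(`{"id": "` + strconv.Itoa(i) + `", "kind": "sample"}`)
	}
	return events
}

// matchStub is a hypothetical stand-in for the matcher under test,
// e.g. a call into a Quamina instance.
func matchStub(event []byte) bool {
	return len(event) > 0 && event[0] == '{'
}

// benchmarkMatcher builds the corpus, excludes that from timing with
// ResetTimer, then cycles through the pre-built records b.N times.
func benchmarkMatcher(b *testing.B) {
	events := makeEvents(10_000)
	b.ResetTimer()
	for i := 0; i < b.N; i++ {
		if !matchStub(events[i%len(events)]) {
			b.Fatal("unexpected non-match")
		}
	}
}

func main() {
	// testing.Benchmark runs a benchmark function outside "go test".
	res := testing.Benchmark(benchmarkMatcher)
	fmt.Println(res.N > 0, res)
}
```

`testing.Benchmark` calls the function several times with growing `b.N`, so `makeEvents` runs more than once here; `b.ResetTimer` keeps that cost out of the reported time.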

Book?

It occurs to me that as I’ve wrestled with the hard parts of Quamina, I’ve done the obvious thing and trawled the Web for narratives and advice. And, more or less, been disappointed. Yes, there are many lectures and blogs and so on about this or that aspect of finite automata, but they tend to be mathemagical and theoretical and say little about how, practically speaking, you’d write code to do what they’re talking about.

The Quamina-diary ongoing posts now contain several tens of thousands of words. Also I’ve previously written quite a bit about Lark, the world’s first XML parser, which I wrote and which was automaton-based. So I think there’s a case for a slim volume entitled something like Finite-state Automata in the Code Trenches. It’d be a big money-maker, I betcha. I mean, when Apple TV brings it to the screen.

Why?

Let’s be honest. While the repo has quite a few stars, I truly have no idea who’s using Quamina in production. So I can’t honestly claim that this work is making the world better along any measurable dimension.

I don’t much care because I just can’t help it. I love executable abstractions for their own sake.

Losing 1½ Million Lines of Go 14 Jan 2026, 8:00 pm

Confession: My title is clickbait-y, this is really about building on the Unicode Character Database to support character-property regexp features in Quamina. Just halfway there, I’d already got to 775K lines of generated code so I abandoned that particular approach. Thus, this is about (among other things) avoiding those 1½M lines. And really only of interest to people whose pedantry includes some combination of Unicode, Go programming, and automaton wrangling. Oh, and GenAI, which (*gasp*) I think I should maybe have used.

Character property matching

I’m talking about regexp incantations like [\p{L}\p{Zs}\p{Nd}], which matches anything that Unicode classifies as a letter, a space, or a decimal number. (Of course, in Quamina “\” is “~” for excellent reasons, so that reads [~p{L}~p{Zs}~p{Nd}].)

(I’m writing about this now because I just launched a PR to enable this feature. Just one more to go before I can release a new version of Quamina with full regexp support, yay.)

Finding the properties

To build an automaton that matches something like that, you have to find out what the character properties are. This information comes from the Unicode Character Database, helpfully provided online by the Unicode consortium. Of course, most programming languages have libraries that will help you out, Go included, but I didn’t use Go’s.

Unfortunately, Go’s library doesn’t get updated every time Unicode does. As of now, January 2026, it’s still stuck at Unicode 15.0.0, which dates to September 2022; the latest version is 17.0.0, last September. Which means there are plenty of Unicode characters Go doesn’t know about, and I didn’t want Quamina to settle for that.

So, I fetched and parsed the famous master file from www.unicode.org/Public/UCD/latest/ucd/UnicodeData.txt.

Not exactly rocket science, it’s a flat file with ;-delimited fields, of which I only cared about the first and third. There are some funky bits, such as the pair of nonstandard lines indicating that the Han characters occur between U+4E00 and U+9FFF inclusive; but still not really taxing.

The output is, for each Unicode category, and also for each category’s complement (~P{L} matches everything that’s not a letter; note the capital P), a list of pairs of code points, each pair indicating a range of the code space where that category applies. For example, here’s the first line of character pairs for the complement of category C; note that it skips the control characters below U+0020 and the soft hyphen at U+00AD.

{0x0020, 0x007e}, {0x00a0, 0x00ac}, {0x00ae, 0x0377},

How many pairs of characters, you might wonder? There are 37 categories and it’s all over the place but adds up to a lot. The top three categories are L with 1,945 pairs, Ll at 664, and M at 563. At the other end are Zl and Zp, both with just 1. The total number of pairs is 14,811, and the generated Go code is a mere 5,122 lines.

Character-property automata

Turning these creations into finite automata was straightforward: I already had the code to handle regexps like [a-zA-Z0-9], logically speaking the same problem. But, um, it wasn’t fast. My favorite unit test, an exercise in sample-driven development with 992 regexps, suddenly started taking multiple seconds, and my whole unit-test suite expanded from around ten seconds to over twelve; since I tend to run the unit tests every time I take a sip of coffee or scratch my head or whatever, this was painful. And it occurred to me that it would be painful in practice to people who want for some good reason or another to load up a bunch of Unicode-property patterns into a Quamina instance.

So, I said to myself, I’ll just precompute all the automata and serialize them into code. And now we get to the title of this essay: my data structure is a bit messy and ad hoc, and just for the categories, before I even got to the complement versions, I was generating 775K lines of code.

Which worked! But it was 12M in size, and while Go’s runtime is fast, there was a painful pause while it absorbed those data structures on startup. Also, opening the generated file regularly caused my IDE (GoLand) to crash. And I was only halfway there. The whole approach was painful to work with, so I went looking for Plan B.

The code that generates the automaton from the code point pairs is pretty well the simplest thing that could possibly work and it was easy to understand but burned memory like crazy. So I worked for a bit on making it faster and cheaper, but so far have found no low-hanging fruit.

I haven’t given up on that yet. But in the meantime, I remembered Computer Science’s general solution for all performance problems, by which I mean caching. Now any Quamina instance computes the automaton for a Unicode property the first time it’s used, then remembers it. As a result, Quamina’s speed at adding Unicode-property regexps to an instance has increased from 135/second to 4,330, a factor of about thirty-two and Good Enough For Rock-n-Roll.
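The caching idea fits in a few lines. This Go sketch is hypothetical (`automaton`, `propertyCache`, and the `build` callback stand in for Quamina’s real types) and simply memoizes the expensive build step behind a mutex, so each property’s automaton is built at most once per cache.

```go
package main

import (
	"fmt"
	"sync"
)

// automaton is a hypothetical stand-in for a compiled finite automaton.
type automaton struct{ property string }

// propertyCache memoizes automata for Unicode properties such as "L" or
// "Nd", so each one is built at most once per cache.
type propertyCache struct {
	mu    sync.Mutex
	built map[string]*automaton
	build func(property string) *automaton // the expensive step
}

func newPropertyCache(build func(string) *automaton) *propertyCache {
	return &propertyCache{built: make(map[string]*automaton), build: build}
}

// get returns the cached automaton for property, building it on first use.
// The lock is held during build, which keeps the code simple but means a
// slow build blocks concurrent lookups.
func (c *propertyCache) get(property string) *automaton {
	c.mu.Lock()
	defer c.mu.Unlock()
	if a, ok := c.built[property]; ok {
		return a
	}
	a := c.build(property)
	c.built[property] = a
	return a
}

func main() {
	builds := 0
	cache := newPropertyCache(func(p string) *automaton {
		builds++ // counts how often the expensive path runs
		return &automaton{property: p}
	})
	for _, p := range []string{"L", "Nd", "L", "L", "Nd"} {
		cache.get(p)
	}
	fmt.Println("lookups: 5, builds:", builds)
}
```

One assumption is baked in: every caller shares the same pointer, so cached automata must never be mutated after they’re built.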

It’s worth pointing out that while building these automata is a heavyweight process, Quamina can use them to match input messages at its typical rates, hundreds of thousands to millions per second. Sure, these automata are “wide”, with lots of branches, but they’re also shallow, since they run on UTF-8 encoded characters whose maximum length is four bytes and average length is much less. Most times you only have to take one or two of those many branches to match or fail.

Should I have used Claude?

This particular segment of the Quamina project included some extremely routine programming tasks, for example fetching and parsing UnicodeData.txt, computing the sets of pairs, generating Go code to serialize the automata, reorganizing source files that had become bloated and misshapen, and writing unit tests to confirm the results were correct.

Based on my own very limited experience with GenAI code, and in particular after reading Marc Brooker’s On the success of ‘natural language programming’ and Salvatore (“antirez”) Sanfilippo’s Don't fall into the anti-AI hype, I guess I’ve joined the camp that thinks this stuff is going to have a place in most developers’ toolboxes.

I think Claude could have done all that boring stuff, including acceptable unit tests, way faster than I did. And furthermore got it right the first time, which I didn’t.

So why didn’t I use Claude? Because I don’t have the tooling set up and I was impatient and didn’t want to invest the time in getting all that stuff going and improving my prompting skills. Which reminds me of all the times I’ve tried to evangelize a better way of doing things to other developers and been greeted by something along the lines of “Fine, but I’m too busy right now, I’ll just go on doing things the way I already know how to.”

Does this mean I’m joining the “GenAI is the future and our investments will pay off!” mob? Not in the slightest. I still think it’s overpriced, overhyped, and mostly ill-suited to the business applications that “thought leaders” claim for it. That word “mostly” excludes the domain of code; as I said here, “It’s pretty obvious that LLMs are better at predicting code sequences than human language.”

And, as it turns out, the domain of Developer Tools has never been a Big Business by the standards of GenAI’s promoters. Nor will it ever be; there just aren’t enough of us. Also, I suspect it’ll be reasonably easy in the near future for open-source models and agents to duplicate the capabilities of Claude and its ilk.

Speaking personally, I can’t wait for the bubble to pop.

Quamina.next?

After I ship the numeric-quantifier feature, e.g. a{2,5}, Quamina’s regexp support will be complete and if no horrid bugs pop up I’ll pretty quickly release Quamina 2.0. Regexps in pattern-matching software are a qualitative difference-maker.

After that I dunno, there are lots more interesting features to add.

Unfortunately, a couple years into my Quamina work, I got distracted by life and by other projects, and ignored it. One result is that so did the other people who’d made major contributions and provided PR reviews. I miss them and it’s less fun now. We’ll see.

Regexp Lessons 1 Jan 2026, 8:00 pm

I’m just landing a chonky PR in Quamina whose effect is to enable the + and * regexp features. As in my last chapter, this is a disorderly war story not an essay, and probably not of general interest. But as I said then, the people who care about coercing finite automata into doing useful things at scale are My People (there are dozens of us).

2014-2026

As I write this, I’m sitting on the same couch in my Mom’s living room in Saskatchewan where, on my first Christmas after joining AWS, I got the first-ever iteration of this software to work. That embryo’s mature form is available to the world as aws/event-ruler. (Thanks, AWS!) Quamina is its direct descendant, which means this story is entering its twelfth year.

Mom is now 95 and good company despite her failing memory. I’ve also arrived at a qualitatively later stage of life than in 2014, but would like to report back from this poorly-lit and often painful landscape: Executable abstractions are still fun, even when you’re old.

Anyhow, the reason I’m writing all this stuff isn’t to expound on the nature of finite automata or regular expressions, it’s to pass on lessons from implementing them.

Lesson: Seek out samples

In a previous episode I used the phrase Sample-driven development to describe my luck in digging up 992 regexp test cases, which reduced the task from intimidating to approachable. I’ve never previously had the luxury of wading into a big software task armed with loads of test cases written by other people, and I can’t recommend it enough. Obviously you’re not always going to dig this sort of stuff up, but give it a sincere effort.

Lesson: Break up deliverables

I decomposed regular expressions into ten unique features, created an enumerated type to identify them, and implemented them in ones and twos. Several of the feature releases used techniques that turned out to be inefficient or just wrong when it came to subsequent features. But they worked, they were useful, and my errors nearly all taught me useful lessons.

Having said that, here’s some cool output that combines this lesson and the one above, from the unit test that runs those test cases. Each case has a regexp then one or more samples each of strings that should and shouldn’t match. I instrumented the test to report the usage of regexp features in the match and non-match cases.

Feature match test counts:
32 '*' zero-or-more matcher
27 () parenthetized group
48 []-enclosed character-class matcher
7 '.' single-character matcher
29 |-separated logical alternatives
16 '?' optional matcher
29 '+' one-or-more matcher
Feature non-match test counts:
45 '+' one-or-more matcher
24 '*' zero-or-more matcher
31 () parenthetized group
49 []-enclosed character-class matcher
6 '.' single-character matcher
32 |-separated logical alternatives
21 '?' optional matcher

Of course, since most of the tests combine multiple features, the numbers for all the features get bigger each time I implement a new one. Very confidence-building.

Lesson: Thompson’s construction

This is the classic nineteen-sixties regular-expression implementation by Ken Thompson, described in Wikipedia here and (for me at least) more usefully, in a storytelling style by Russ Cox, here.

On several occasions I rushed ahead and implemented a feature without checking those sources, because how hard could it be? In nearly every case, I had problems with that first cut and then after I went and consulted the oracle, I could see where I’d gone wrong and how to fix it.

So big thanks, Ken and Russ.

Lesson: List crushing

In some particularly nasty regular expressions that combine [] and ? and + and *, you can get multiple states connected in complicated ways with epsilon links.

In Thompson’s Construction, traversing an NFA means transitioning not just from one state to another but from a current set of states to a next set, repeating until you match or fail. You also compute epsilon closures as you go along; I’m skipping over details here. The problem was that traversing these pathologically complex regexps with a sufficiently long string was leading to an exponential explosion in the current-states and next-states sizes (not a figure of speech; I mean O(2^N)). And despite the usage of the word “set” above, these weren’t sets: they contained endless duplicates.

The best solution would be to study the traversal algorithm and improve it so it didn’t emit fountains of dupes. That would be hard. The next-best would be to turn these things into actual de-duped sets, but that would require a hash table right in the middle of the traversal hot spot, and be expensive.

So what I did was to detect whenever the next-steps list got to be longer than N, then sort it and crush out all the dupes. As I write, N is 500, based on running benchmarks and finding a value that makes number go down.

The reason this is good engineering is that the condition where you have to crush the list almost never happens, so in the vast majority of cases the only cost is an if len(nextSteps)>N comparison. Some may find this approach impure, and they have a point. I would at some point like to go back and find a better upstream approach. But for now it’s still really fast in practice, so I sleep soundly.

Lesson: Hell’s benchmark

I’ve written before about my struggles with the benchmark where I merge 12,959 wildcard patterns together. Back before I was doing epsilon processing correctly, I had a kludgey implementation that could match patterns in the merged FA at typical Quamina speeds, hundreds of thousands per second. With correct, general-purpose epsilon handling, I have so far not been smart enough to find a way to preserve that performance. With the full 13K patterns, Quamina matches at less than 2K/second, and with a mere thousand, at 16K/second. I spent literally days trying to get better results, but decided that it was more valuable to Quamina to handle a large subset of regular expressions correctly than to run idiotically large merges at full speed.

I’m pretty sure that given enough time and consideration, I’ll be able to make it better. Or maybe someone else who’s smarter than me can manage it.

Question: What next?

The still-unimplemented regexp features are:

{lo,hi} : occurrence-count matcher

~p{} : Unicode property matcher

~P{} : Unicode property-complement matcher

[^] : complementary character-class matcher

Now I’m wondering what to do next. [^] is pretty easy I think and is useful, also I get to invert a state-transition table. The {lo,hi} idiom shouldn’t be hard but I’ve been using regexps for longer than most of you have been alive and have never felt the need for it, thus I don’t feel much urgency. The Unicode properties I think have a good fun factor just because processing the Unicode character database tables is cool. And, I’ve used them.

[Update: the [^] thing took a grand total of a couple of hours to build and test. Hmm, what next?]

Lesson: Coding while aging

I tell people I keep working on code for the sake of preserving my mental fitness. But mostly I do it for fun. Same for writing about it. So, thanks for reading.

Humanist Plumbing 18 Dec 2025, 8:00 pm

What happened was, a faucet started dripping. And then I managed to route around the malignant machineries of late-stage capitalism. These days, that’s almost always a story worth telling.

Normally, faced with a drip, we’d pull out the old cartridge, take it to the hardware store, and buy another to swap in. But the fixture in our recently-acquired place was kind of exotic and abstract and neither of us could figure it out. We were gloomy because we’ve had terrible luck over the years with the local plumbing storefronts. So…

The neighbors

Our neighborhood has an online chat; both sides of the streets in a square around a single city block. This is our first experience with such a thing. I gather that these don’t always work out well, but this one has been mostly pretty great.

So at 9AM I posted “Hey folks, looking for a plumber recommendation” and by ten there were three.

[Late-stage capital: “You’re supposed to ask the AI in your browser. It’ll provide a handy link to a vendor based on geography and reviews but mostly advertising spend. Why would you want to talk to other people?”]

Thomas

I picked a neighbor’s suggestion, first name Thomas, and called the number. “Thomas here” on the second ring. I explained and he said “What’s the brand name? If it’s one of those Chinese no-names I probably have to replace the whole thing.” I said “No idea, but I’ll take a picture and send it to you.”

Turns out the brand was Riobel, never heard of them. I texted Thomas a picture and he got right back to me: “That’s high-end, it’s got a lifetime warranty. I’ll come by a little after 5 and take it out. Their dealer is in PoCo (an outer suburb) and I live out there, so I can swap it.”

[Late-stage capital: “You’re supposed to engage with the chatbot on the site we sent you to, which will integrate with your calendar and arrange for a diagnostic visit a week from Wednesday. You could call them but you’d be in voice-menu hell and eventually end up at the same chatbot.”]

When Thomas showed up, with a sidekick, he was affable and obviously competent. Within five minutes I was glad I’d called for help; that faucet’s construction was highly non-obvious. Thomas knew what he was doing and it still took him the best part of a half-hour to get it all disassembled and the cartridge extracted.

Nothing’s perfect

The next morning, a text from Thomas: “Bad news. That part is back-ordered till May at Riobel’s dealer. We can maybe get it online but you’d have to pay.” He attached a screenie of a Web search for the part number; there were several online vendors.

It’s irritating that the “lifetime warranty” doesn’t seem to be helping me, but to be fair, the part was initially installed in 2011.

[Late-stage capital: “Lifetime warranty? Huh? Oh, of course you mean our VIP-class subscription offering, that’s monthly with a discount for annual up-front payment.” (And the part would still be back-ordered.)]

Kolani

High-end plumbing parts are expensive. But one of Thomas’ recommendations, Kolani Kitchen and bath, was asking less.

They had a phone number on their Web site so I called it and the voice menu only had three options: Location, opening hours, and Operator. The operator was an intelligent human and picked up right away. “Hi, I’m looking at ordering a cartridge and wanted to see if you had it in stock.” “Gimme the part number?” I did and she went away for a couple of minutes and came back “Yeah, it’s in stock.” So I ordered it and I have a tracking number.

[Late-stage capital: “There aren’t supposed to be independent dealers; the manufacturer has taken PE money and eliminated the middlemen to better capture all the value-add. Or maybe there’ll be a wholesaler, but just one, because another PE rolled up all the distributors to maximize pricing power. Either way, you won’t be able to get a human on the line.”]

Payment

Next morning, I got an email that my order had been canceled. So I called that intelligent operator and she said “I was going to email you. Our system won’t take payment if the billing and delivery addresses are different. Sorry. If you’re in a hurry you could do an e-transfer?” (In Canada, all the banks are in a payments federation that makes this dead easy.) So I did and the part has shipped.

[Late-stage capital: “What? If you’d used our Integrated online payment offering, it would all just work. What if it doesn’t, you ask? Yeah, sucks to be you in that situation, of course there’d be nobody you could talk to about that.”]

Thomas came by again to put the old cartridge back in for now and said “This thing, don’t worry about a drip, it’s not fast and it probably won’t get worse. Text me when the new part gets in.”

Late-stage capitalism

It won’t be missed when it’s gone.

After the Bubble 7 Dec 2025, 8:00 pm

The GenAI bubble is going to pop. Everyone knows that. To me, the urgent and interesting questions are how widespread the damage will be and what the hangover will feel like. On that basis, I was going to post a link on Mastodon to Paul Krugman’s Talking With Paul Kedrosky. It’s great, but while I was reading it I thought “This is going to be Greek to people who haven’t been watching the bubble details.” So consider this a preface to the Krugman-Kedrosky piece. If you already know about the GPU-fragility and SPV-voodoo issues, just skip this and go read that.

Depreciation

When companies buy expensive stuff, for accounting purposes they pretend they haven’t spent the money; instead they “depreciate” it over a few years. That is to say, if you spent a million bucks on a piece of gear and decided to depreciate it over four years, your annual financials would show four annual charges of $250K. Management gets to pick your depreciation period, which provides a major opening for creative accounting when the boss wants to make things look better or worse than they are.

Even when you’re perfectly honest it can be hard to choose a fair figure. I remember one of the big cloud vendors announcing it was going to change its fleet depreciation from three to four years, and that announcement having an impact on its share price.
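To make the arithmetic concrete, here is a minimal straight-line sketch. It assumes zero salvage value and equal annual charges; real accounting rules have more wrinkles. It shows why stretching the period from three to four years matters: each year's charge shrinks, so reported profit rises even though the cash spent is unchanged.

```python
def straight_line_schedule(cost: float, years: int) -> list[float]:
    """Annual charges under straight-line depreciation, assuming zero salvage value."""
    if years <= 0:
        raise ValueError("years must be positive")
    # Each year carries the same share of the original cost.
    return [cost / years] * years

four_year = straight_line_schedule(1_000_000, 4)   # four charges of 250000.0
three_year = straight_line_schedule(1_000_000, 3)

# A longer period means a smaller charge per year, so that year's reported
# profit goes up without any change in how much money was actually spent.
print(three_year[0], four_year[0])
```

The million-dollar, four-year case reproduces the $250K-per-year figure above.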

Depreciation is orderly whether or not it matches reality: anyone who runs a data center can tell you about racks with 20 systems in them that have been running fine since 2012. Still, orderly is good.

In the world of LLMs, depreciation is different. When you’re doing huge model-building tasks, you’re running those expensive GPUs flat out and red hot for days on end. Apparently they don’t like that, and flame out way more often than conventional computer equipment. Nobody who is doing this is willing to come clean with hard numbers but there are data points, for example from Meta and (very unofficially) Google.

So GPUs are apparently fragile. And they are expensive to run because they require huge amounts of electricity. More, in fact, than we currently have, which is why electrical bills are spiking here and there around the world.

Why does this matter? Because when the 19th-century railway bubble burst, we were left with railways. When the early-electrification bubble burst, we were left with the grid. And when the dot-com bubble burst, we were left with a lot of valuable infrastructure whose cost was sunk, in particular dark fibre. The AI bubble? Not so much. What with GPU burnout and power charges, the infrastructure is going to be expensive to keep running, not something that new classes of application can pick up and use on the cheap.

Which suggests that the post-bubble hangover will have few bright spots.

SPVs

This is a set of purely financial issues but I think they’re at the center of the story.

It’s like this. The Big-Tech giants are insanely profitable but they don’t have enough money lying around to build the hundreds of billions of dollars’ worth of data centers the AI prophets say we’re going to need. Which shouldn’t be a problem; investors would line up to lend them as much as they want, because they’re pretty sure they’re going to get it back, plus interest.

But apparently they don’t want to borrow the money and have the debts on their balance sheet. So they’re setting up “Special Purpose Vehicles”, synthetic companies that are going to build and own the data centers; the Big Techs promise to pay to use them, whether or not genAI pans out and whether or not the data centers become operational. Somehow, this doesn’t count as “debt”.

The financial voodoo runs deep here. I recommend Matt Levine’s Coffee pod financing and the Financial Times’ A closer look at the record-smashing ‘Hyperion’ corporate bond sale. Levine’s explanation has less jargon and is hilarious; the FT is more technical but still likely to provoke horrified eye-rolls.

If you think there’s a distinct odor of 2008 around all this, you’d be right.

If the genAI fanpholks are right, all the debt-only-don’t-call-it-that will be covered by profits and everyone can sleep sound. Only it won’t. Thus, either the debts will apply a meat-axe to Big Tech profits, or (like 2008) somehow they won’t be paid back. If whoever’s going to bite the dust is “too big to fail”, the money has to come from… somewhere? Taxpayers? Pension funds? Insurance companies?

Paul K and Paul K

I think I’ve set that piece up enough now. It points out a few other issues that I think people should care about. I have one criticism: They argue that genAI won’t produce sufficient revenue from consumers to pay back the current investment frenzy. I mean, they’re right, it won’t, but that’s not what the investors are buying. They’re buying the promise, not of more revenue, but of higher profits that happen when tens of millions of knowledge workers are replaced by (presumably-cheaper) genAI.

I wonder who, after the loss of those tens of millions of high-paid jobs, are going to be the consumers who’ll buy the goods that’ll drive the profits that’ll pay back the investors. But that problem is kind of intrinsic to Late-stage Capitalism.

Anyhow, there will be a crash and a hangover. I think the people telling us that genAI is the future and we must pay it fealty richly deserve their impending financial wipe-out. But still, I hope the hangover is less terrible than I think it will be.

Tracy Numbers 2 Dec 2025, 8:00 pm

Here’s a story about African rhythms and cancer and combinatorics. It starts a few years ago when I was taking a class in Afro-Cuban rhythms from Russell Shumsky, with whom I’ve studied West-African drumming for many years. Among the basics of Afro-Cuban music are the Bell Patterns, which come straight out of Africa. The most basic is the “Standard Pattern”, commonly accompanying 12/8 music. “12/8” means there are four clusters of three notes and you can count it “one-two-three two-two-three three-two-three four-two-three”. It feels like it’s in four, particularly when played fast.

Here’s the standard bell pattern in music notation. Instead of one 12/8 bar, I’ve broken it into four 3/8 chunks. Let’s call those “mini-measures”; I’ll use that or just “minis” in the rest of this piece.

Bell patterns are never played in isolation, but circularly on fast repeat, so the first note immediately follows the last.

In the sound sample, I’m playing a background beat on a conga, emphasizing the beginning of the 12/8 measures. The actual bell pattern is on the high “child” bell of a Gankoqui, an African dual-cowbell set.

“þ” the cat was trying bell patterns but unfortunately cats can’t count as high as 12. Collar by BirdsBeSafe.com.

That’s my Gankoqui. I bought it off someone on Etsy who imports them from Ghana. It came with that little thin stick that sounds nice, but sometimes I use a regular drumstick when things get loud.

The problem

Russell’s a good teacher and the standard pattern isn’t that tricky, but I just couldn’t get a grip on it. It’s a little harder than it looks what with cycling it really fast, and then you’re playing it against complicated music with other instrumental voices. I probably would have got there, but the lessons ran out of gas in the depths of Covid.

Introducing Tracy

She was Russell’s long-time partner, a good person and good drummer too. When you were struggling with a complex rhythm it was helpful to watch Tracy’s hands, because she was always on the beat.

Tracy lived with stage four metastatic cancer for many years and braved endless awful rounds of therapy while remaining generally cheerful. She could be morbidly funny; I bought her congas (you can hear one behind the beat in the samples) when she had a storage-space problem. She told me she was carefully planning her finances so she’d run out of money just before the cancer got her.

I always enjoyed any time I spent with her. Then, a dozen years into her cancer journey, this last summer it got into her brain and it was pretty clear her end times were upon her.

The hospice

Tracy’s last months were spent at St. John Hospice in Vancouver’s far west. I can’t say enough good things about it. If you’re near Vancouver and your death becomes imminent, try to be there if you can’t be at home. It’s comfortable and the staff are expert and infinitely kind. The rules that apply at hospices are different from those at hospitals; for example, Tracy’s cat joined her in residency and had the run of the place.

I (and other fans of Russell and Tracy) visited the hospice a few times. My last visit was just days before her death and, while she was fatigued and spaced-out, it was still Tracy. I wasn’t close enough to call her a friend, but I miss her.

We got to talking about Afro-Cuban music and I laughed at myself, saying how I never could get that damn bell pattern down. Said Russell: “Oh, you mean the standard 12/8 pattern? Tracy, let’s show him” and on the second try, they were doing it together, just voices, ta ta ta-ta, ta ta ta.

Driving home from the hospice, I told myself that if Tracy could manage the bell pattern in her condition, I could bloody well learn it. So I studied the details and used a metronome app and after a while I thought I had it down pretty well.

Sounds cool

I go to a weekly by-invitation African drum jam where I’m on the weaker end of the skill spectrum. The first time a 12/8 came along after I thought I’d learned the pattern, I had to summon up courage and then I fluffed the first few bars. But after a while I was grooving along and smiling and thinking the bell sounded pretty cool against the thunder of all the djembés and dununs.

And, even played amateurishly, it does sound cool. Let’s have another look at the music.

West-African drumming often tries to achieve rhythmic tension, where a given note could fit in multiple ways and your ear is not 100% sure what’s going on. The standard pattern does this, twice.

Remember, I said that 12/8 sounds like it’s “in four”, especially if you hit the first beat of each of the four mini-measures. But two of the four minis here go around the first note, weakening the 4/4 feel. Especially on that third mini; you can feel the beat slide by the missing “one”.

Also, the last three notes are evenly spaced two beats apart, so six of them would fill the 12-beat pattern, suggesting that this might be in triple time, not 12/8.

The effect, to my ears, is of the bell, higher-pitched than the drums, shifting against the rhythm, or even dancing across it. At the drum jam, at almost any given moment it won’t be just drums, one or more people will have clave sticks or rattles or tambourines or cowbells weaving through the beat.
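The two ambiguities described above can be checked mechanically. What follows is a minimal sketch. It writes the standard pattern as 12 steps, with '1' for a bell stroke and '0' for a rest, which is the binary form used later in this piece. It then lists the stroke positions, the circular gaps between strokes (circular because the pattern loops), and which mini-measure downbeats get hit. The helper names are hypothetical.

```python
# Standard bell pattern as 12 steps: '1' = bell stroke, '0' = rest.
STANDARD = "101011010101"

def onsets(pattern: str) -> list[int]:
    """Step indices (0-based) where a stroke lands."""
    return [i for i, c in enumerate(pattern) if c == "1"]

def circular_gaps(pattern: str) -> list[int]:
    """Distance from each stroke to the next, wrapping round because the pattern loops."""
    pos = onsets(pattern)
    n = len(pattern)
    return [(pos[(k + 1) % len(pos)] - pos[k]) % n for k in range(len(pos))]

def downbeats_hit(pattern: str, steps_per_mini: int = 3) -> list[bool]:
    """Whether each mini-measure starts with a stroke."""
    return [pattern[i] == "1" for i in range(0, len(pattern), steps_per_mini)]

print(onsets(STANDARD))         # [0, 2, 4, 5, 7, 9, 11]
print(circular_gaps(STANDARD))  # [2, 2, 1, 2, 2, 2, 1]
print(downbeats_hit(STANDARD))  # [True, False, False, True]
```

The strokes at steps 7, 9 and 11 sit two steps apart, which is the triple-time hint. The two `False` entries are the second and third minis, the ones that step around the one.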

Mixing it up

After I felt confident playing the standard pattern, it still sounded cool, but I wanted to branch out, not just go around and around the same seven notes. So the first thing I did was start mixing in a few of these.

This repeats the second mini-measure through the end of the phrase. In the sound sample I mix it up with the standard pattern. It’s got less rhythmic tension but on the other hand flows along smoothly with the drum thunder. Also you don’t have to think at all, so you can enjoy listening to what the other people are playing.

Then I got a little more ambitious and reshuffled:

The mini-measures are the same as in the standard pattern, just in a different order. Anyhow, this kind of thing is fun.

Combinatorics

Then one evening I was lying in bed, thoughts wandering, and wondered “How many bell patterns are there?” A little mental math showed that of course there are eight possible arrangements of tones in a 3-note mini-measure. Here they are:

I’ll use the boxed numbers to identify the minis.

Why are the minis numbered in that order? Every computer programmer looking at this already knows, but for the rest of you: If the notes are ones and the rests are zeroes, they are the eight binary numbers between zero and seven inclusive. So each number’s binary bits show where the drumstrokes are. By the way, numbers four through seven have a note on the one beat, zero through three don’t.
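For the non-programmers, here is a minimal sketch of that numbering. Each mini number from 0 to 7 is written as three binary digits, read left to right as the three eighth-note slots. The 'x'/'.' rendering is a hypothetical text notation of my own, not part of the original.

```python
def mini_bits(n: int) -> str:
    """Three-digit binary string for mini-measure n (0-7); '1' = drumstroke, '0' = rest."""
    if not 0 <= n <= 7:
        raise ValueError("a 3/8 mini-measure is numbered 0 through 7")
    return format(n, "03b")

def hits_the_one(n: int) -> bool:
    # The leftmost (most significant) bit is the first beat of the mini.
    return mini_bits(n)[0] == "1"

for n in range(8):
    bits = mini_bits(n)
    # 'x' for a stroke, '.' for a rest: a hypothetical notation for illustration.
    print(n, bits, "".join("x" if b == "1" else "." for b in bits))
```

Only 4 through 7 have the high bit set, which is why they are exactly the minis with a note on the one.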

Is it weird to have a zero i.e. silent mini? I don’t think so, sometimes spaces between the notes really matter.

Patterns

Anyhow, the original question was about the number of different bell patterns. Each has four mini-measures with 8 possible values. So the answer is 8 ⨉ 8 ⨉ 8 ⨉ 8, which is 4,096.

And each of them can be identified by four little numbers, ranging from T0000 (I can hear the bandleader yelling “gimme zeroes for the sax break”) to T7777, a flurry of eighth notes that you might use in the big encore-number finish designed to leave the audience yelling as you walk off stage. The standard bell pattern is T5325; in binary “101 011 010 101” and the 1’s are drumstrokes. The first variation above is T5333 and the second is T5253.

The “T” in front of each bell pattern number is for Tracy.
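A minimal sketch of how a Tracy number expands into strokes, assuming only the rules stated above: a "T" followed by four digits 0 to 7, each digit being one mini in binary. The helper names `decode_tracy` and `stroke_count` are hypothetical.

```python
from itertools import product

OCTAL = "01234567"

def decode_tracy(code: str) -> str:
    """Expand a Tracy number like 'T5325' into a 12-step string; '1' = drumstroke."""
    digits = code[1:]
    if len(code) != 5 or code[0] != "T" or any(d not in OCTAL for d in digits):
        raise ValueError(f"not a Tracy number: {code!r}")
    # Each digit is one 3/8 mini-measure, written as three binary digits.
    return "".join(format(int(d), "03b") for d in digits)

def stroke_count(code: str) -> int:
    """How many notes the bell plays in one pass of the pattern."""
    return decode_tracy(code).count("1")

# Every possible pattern: four minis, eight choices each, T0000 through T7777.
ALL = ["T" + "".join(ds) for ds in product(OCTAL, repeat=4)]

print(len(ALL), ALL[0], ALL[-1])  # 4096 T0000 T7777
print(decode_tracy("T5325"))      # 101011010101
```

The standard pattern T5325 comes out as seven strokes, and so does the reshuffled T5253. T5333 has eight.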

If you go look at the Wikipedia Bell-pattern article, they emphasize that there are lots of different patterns. Now they all have numbers! The article makes special mention of T5124, T5221, and T5244.

But why, Tim?!

I’m a computer programmer with a Math degree, and an amateur musician. Anyone who thinks that these are disjoint disciplines is wrong. And, I think the notation is (on a very small scale) kind of pleasing.

But the work has actually helped me. Now that I’ve considered each mini-measure and its personality, I find all of them sneaking into my Gankoqui excursions, which have gotten noticeably weirder, for example T5635. Nobody’s threatened to kick me out of the jam, so far.

Also, this has given me a real appreciation of whoever it was that, probably thousands of years ago and certainly in Africa, picked the “standard” pattern as, well, standard. Because it’s great.

What’s missing?

You may have noticed that Gankoquis have two bells and I’ve been ignoring that fact. Normally you’d play these patterns on the smaller “child” bell, but sometimes bringing the big parent bell in for a couple of strokes works well. Here’s an example (h/t Russell).

Also, this discussion has been limited to 3/8 minis in 12/8 measures. There’s another whole universe of 4/4 rhythms that also have bell patterns (but everything exists in the shadow of the clave rhythm). In that world a pattern has four measures, each of which can have sixteen possible values, so there are 65,536 different ones.
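Both counts fall out of one formula, assuming as the text does that every slot is simply a stroke or a rest. With s slots per group and four groups, a group has 2^s possible values, so there are (2^s)^4 patterns. Here is a minimal sketch with a hypothetical helper name:

```python
def pattern_count(steps_per_group: int, groups: int = 4) -> int:
    """Number of distinct stroke/rest patterns: 2**steps values per group, per group."""
    return (2 ** steps_per_group) ** groups

print(pattern_count(3))  # 12/8, four 3-step minis: 4096
print(pattern_count(4))  # 4/4, four 4-step groups: 65536
```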

And I could repeat the numbers construction above for 4/4. But I’m not going to, because the rewards feel smaller. In my experience, 4/4 rhythms lope smoothly along and everyone knows where the one is even when there’s no note on it, so there’s less ambiguity to work with. Anyhow, any neophyte (like for example me) can play a pretty smooth bell line against 4/4; just start with clave and add variations (or don’t) and you’ll be fine.

Useful?

These numbers are just elementary mathemusical fun. If anyone else wanted to use them that’d be a pleasant surprise. If “anyone else” is you, go ahead, but they have a name and you have to use it. These are called Tracy Numbers.

Colophon

Music fragments by MuseScore Studio. Sound samples facilitated by GarageBand, a Shure MV51, and PSB Alphas.

Fútbol Joy 23 Nov 2025, 8:00 pm

Last Saturday I had one of my peak 2025 experiences, at the MLS semifinal between the Vancouver Whitecaps FC (hereinafter “Caps”) and Los Angeles FC (“LAFC”). Both those FC’s stand for “Fútbol Club”. 53,095 other fans were there with my son and me; we came home smiling. I’d like to share a bit of the joy and an unexpected side-effect: I’ve gone off most other TV sports.

Earlier this year I wrote about becoming a Caps fan. Anyone who enjoys this will probably like that piece too.

Let’s set the scene with pictures.

Supporters gather pre-game at a nearby Irish-flavored pub.

Supporters march to the game.

When you’re in a frivolous parade, everyone smiles at you, even the drivers hemmed in by paraders.

The banner is what’s called a tifo. I have to admit I failed to parse it.

The game

It was 120 minutes of ridiculously over-the-top psychodrama. I was exhausted at the end. If you want a full retrospective, type “whitecaps lafc” into any Web search to get the particulars. Or hit this. But here’s the short version:

The Caps came out sharp and fast and had LAFC pretty well flummoxed through most of the first half, scoring two goals, one of which was a jaw-dropper. They also managed to contain LAFC’s superstar Son Heung-min.

In the second half, the visitors reconfigured and were much better. Son did the superstar thing and scored two goals, one another jaw-dropper, just as the game was ending, to tie things up.

The Caps lost two players, one for fouls, one to injury, and were down two men through most of overtime. LAFC’s assault was relentless but the Caps held on, getting more than a little lucky.

So it went to a penalty-kick competition. Son, shockingly, missed; he was exhausted and limping. At the end of the day the Caps scored four out of five to LAFC’s three and got the win.

Suffice it to say that the dramatic peak wasn’t any of the flashes of brilliance, but rather the errors that happen when people are at the limit of their endurance. Vancouver made one fewer, that’s all there was to it.

That sound

There’s not another like it on this planet. I mean tens of thousands of voices in a big stadium greeting a home goal. It’s specific to fútbol, because its goals, compared to North American sports where points come in dozens, are such huge markers. The sound-pressure wavefront, coming from every direction, of all those inarticulate howls of joy, all in the same tiny fraction of a second, is a whole-body experience.

The side effect

I’ve long been a televised-sports fan; the only TV show that isn’t scripted and thus has real drama. But since getting mixed up in fútbol, I’ve sort of gone off the other sports I used to watch. I had to think a bit to figure out why.

It’s the ads. The football, basketball and hockey broadcasts screech to a halt every ten or fewer minutes for a couple of minutes of advertising. Most of the ads are dumb, many are offensive, and the relentless addiction-promoting gambling pitches are both. On top of which there’s the bone-headed repetition; someone somewhere thinks I’m gonna lean toward this generic SUV as opposed to that one because of the 138th time they’ve run that commercial where hip young people with fulfilling lives are going to have sex because of its dashboard geometry and motorized hatchback. I mean, the other SUVs have that stuff too, but this one’s actors are more convincingly likely to be headed for bed?

Being in the room with this shit makes me angry even though my mute-button skillz are sharp. The world being what it is, I really don’t need to be around something that angers me several times per hour. So, while I loved playing both football and basketball when I was young, and while the pro games are good entertainment, my patience seems to be running out.

Fútbol, on the other hand, has two fiftyish-minute chunks of continuous action, so you can sink into the flow of the game. The contrast, switching back and forth between that and the other North American sports, is stark.

(Except that I’m mostly forgiving baseball because its natural rhythm is full of stops suitable for hot dogs and beer and admiring cute babies and T-shirts and discussions of etymology and epidemiology. I mean, the ads are still mindless repetitive shit, we’re just more accustomed to switching attention away when nothing’s happening.)

And I’m not claiming that fútbol is more virtuous or less dirty than any other sport; after all, its global organizing body FIFA has repeatedly been exposed as galactically corrupt. I’m just saying it offers a better experience.

Soccer?

That word is an awkward invention by nineteenth-century British toffs based on abbreviating “association”. I accept that on my continent “football” means gridiron, but people who enjoy “soccer” still say it, so in print I offer “fútbol”, which is a typographically nice little cluster; please humor me.

Anyhow, you might want to check out your local team; in North America, the prices are lower than for the other sports. Who could be against cheap happiness with fewer gambling ads?

Long Links 16 Nov 2025, 8:00 pm

As usual, offering more long-form stuff here than any one person should be expected to read all of, hoping that a few of the choices will improve your day. This time out it’s almost all politics, but that’s the way the world is. And some of the politics aren’t American!

Some of these are paywalled, sorry. And the whole thing is kind of long so if you’re in a hurry, you might want to jump to the last section, entitled Wonderful things.

Social media

Here’s a real treat. Perhaps the premier example of a blog that’s grown happily from a one-man operation to a successful and sustainable small business would be Talking Points Memo. It provides what I think is about the deepest carefully-reported coverage of the American progressive scene available anywhere. I’ve been a subscriber for years.

They’re marking their 25th anniversary and, to celebrate, have been working on a history of blogging: Pivots, Trolls, & Blogrolls. Contributors so far: Sarah Jaffe, Matt Pearce, Brian Beutler, Kylie Cheung, Megan Greenwell, David Weigel, Jon Allsop, Adam Mahoney, Julianne Escobedo Shepherd, Max Rivlin-Nadler, Bhaskar Sunkara, Hamilton Nolan, Ana Marie Cox, Marisa Kabas, Kelly Weill, Aurin Squire, Marcy Wheeler, Andrew Parsons, Jeet Heer, and Sarah Posner.

It’s full of razor-sharp insights and nostalgic smiles. I think it’s paywalled? If so, and if you’ve got feels about the blog form, it’d probably be worth your while to sign up for a month just to read this collection.

And thanks to Josh Marshall of TPM for putting it together all these years and especially for this particular mind candy.

Politics (US)

The Trump administration is, generally speaking, strange. For a terrifying trip several standard deviations off the strange end of the strangeness, there’s Laura Loomer’s Endless Payback. At several points in my traversal of this piece I simply could not believe what I was reading. My guess is that it will regularly be cited by historians of the 23rd century, to add spice to their narrative.

Many commentators on the right have been horrified at Zohran Mamdani’s New York victory, seeing him as the white-hot pointy end of the “woke mind virus”. Well, what could be more woke, I ask, than the Department of Africana Studies at Bowdoin, a boutique liberal-arts college in Maine?

Mamdani was a student there, and now the former head of said department offers us Maybe Don’t Talk to the New York Times About Zohran Mamdani. Peter Coviello is not a moderate but is a formidable writer: “The storied choice between socialism and barbarism was made exquisitely clear a good many years ago in the United States, and both major parties chose barbarism.” Despite that, it’s mostly written with a light touch, often amusing. Read it.

Politics (Other)

It’s not in the news much, but China is facing colossal economic challenges. Learn about “neijuan” (in English, “involution”) which might well put the nation on the path of deflation. That’s a path that, once an economy is on it, is very hard to get off; it took Japan decades.

While we’re looking at China… there used to be “Kremlinology”, a study of the entirely opaque workings of the top inner circles of the Soviet regime. In that spirit, here’s some 人民大会堂-ology: Forever Xi Jinping? Perhaps Not. I enjoyed it but have no idea if it’s, you know, correct. Nobody outside that inner circle does.

Now let’s jump seven time zones west, to France, where the redoubtable Thomas Piketty offers Le Pen’s RN has become the party of billionaires. Unlike the Beijing piece, Piketty’s is (as usual for him) supported by concrete data and I tend to believe it.

Last stop: Gaza, waiting to find out if an externally-imposed and leaky ceasefire will hold, and whether there is a path from where they are to something better. As for “where they are”, here’s The Gaza I Knew Is Gone by Ghada Abdulfattah. It describes what life is like for the citizens of Gaza after all these endless months of brutality.

Politics (everywhere)

Pope Leo’s Apostolic Exhortation Dilexi Te of the Holy Father Leo XIV To All Christians On Love For The Poor got a short-lived run in the headlines, accompanied by “But that’s… socialism!” pearl-clutching from Stage Right. It deserves a read. The first three-quarters or so are a trip through Church history, starting in Nazareth, aimed at showing that concern for the poor has always been central to the faith. Which is fine, but eventually the Pope gets concrete about the twenty-first century, and is convincing whether or not you believe in any gods.

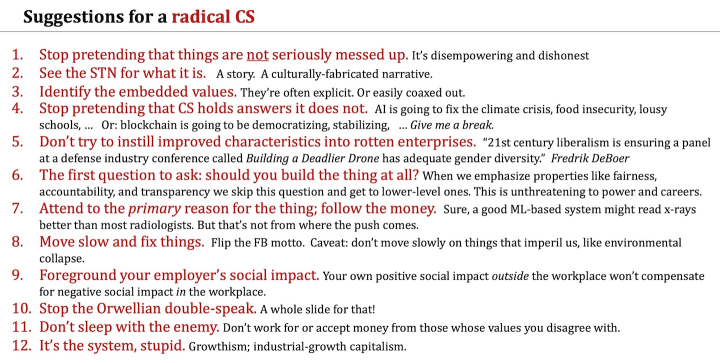

The next section is about Tech and while Radical CS is a presentation from “The Third NIST Workshop on Block Cipher Modes of Operation” it’s about politics, oh yes it is. We very much need more of this kind of thing.

Tech

A post from David Chisnall on Mastodon contains this gem: “Machine learning is amazing if … the value of a correct answer is much higher than the cost of an incorrect answer.” Which is one of those things that’s obviously true as soon as you hear it. The whole piece is good.

The churning debate around AI is full of arm-waving and relatively free of objective numerical data. So here’s Ed Zitron’s This Is How Much Anthropic and Cursor Spend On Amazon Web Services. And I ask: How do the people making these investments think they’re going to get their money back?

Speaking of things that are obviously true: TextEdit and the Relief of Simple Software. We could do so much better than we do, and in our hearts we know why we’re not, and it’s about money and power, not technology.

Bluesky has brought its AT Protocol to the IETF; let’s see what happens. Here are Authenticated Transfer: Architecture Overview and Authenticated Transfer Repository and Synchronization. The drafts have source-code repos.

Finally, here’s Spinel, which is trying to build a decentralized, transparent, and somewhat-democratic engineering resource for the Ruby and Rails platforms. This follows on a distinctly-stinky power play where people with money grabbed the steering wheel. Best of luck to Spinel.

Canadian health

Canada has “single-payer” public healthcare that comes with your taxes, which in my experience works well, see here and here.

This costs a lot of money, over $300B/year. Predictably, there are business types panting with eagerness to get their hands on some of that money. And they can; a lot of medical practices and clinics and labs are owned by private companies, apparently doing well while being restricted to billing the government for the standard fees. But what they really want is a two-tier system where they can directly charge rich people more for better care. It seems like every year someone thinks up a clever dodge to nibble away at the system. Fortunately, these people have, so far, ended up losing in the courts.

Here’s a news story: AI Could Save Canada’s Health-Care System. It’s actually pretty coherent and I found it plausible that this technology could maybe improve, for example, the agonizingly slow emergency-room experience.

But then I got curious as to the business angle and tracked the source of the story down to a business called maple. Sure enough, here they are trying to siphon off direct payments for access to doctors. I predict they’ll end up in court, and lose.

And then there’s the government of Alberta, run by hard-right dipshits who hate most aspects of being Canadian and loathe the proposition that government can provide good and efficient services. Thus: Alberta government plans to allow residents to privately pay for any diagnostic or screening service. Feaugh. I hope this one ends up in court too.

Paying for Open Source

It is coming to the world’s attention that most of our tech infrastructure is radically dependent on open-source software maintained by a cadre of developers who are old, tired, and not getting paid for their work. I have direct experience with trying unsuccessfully to convince Big-Tech business leaders to invest in Open-Source maintenance and infrastructure. Here’s some of the coverage:

In The Register: Open source maintainers underpaid, swamped by security, going gray.

Open Source Security Foundation: Open Infrastructure is Not Free: A Joint Statement on Sustainable Stewardship.

Nils Adermann: A Call for Sustainable Open Source Infrastructure.

Hackernoon: How Can Governments Pay Open Source Maintainers?

The New Stack: FFmpeg to Google: Fund Us or Stop Sending Bugs.

Things obviously can’t go on as they are. The best path forward isn’t obvious to me, but we need to start finding it.

Economics and life

Paul Krugman and Martin Wolf, two really smart guys who aren’t technologists, talk about the impact of AI. Technofeudalism is considered carefully. My favorite out-take is from Krugman: “[I] have come to the conclusion that anything that I want to believe about the prospects of AI and its economic effects, all I need to do is do a little searching, and I can find some expert who will tell me whatever it is I want to believe.” Even with that well-justified cynicism, there is deep stuff here.

I read Matt Levine’s newsletter almost every day; not only does it teach you things about how money makes the world go round, it entertains; his glee at some particularly juicy financial swindle or clever arbitrage maneuver will bring a smile to your face. In Money Stuff: Quantum Bond Trading he addresses a deeper question: Does the Finance business actually benefit society? Obviously a subject worthy of attention, and he makes it amusing.

Wonderful things

The dude who wrote Paddling the Darien is clearly crazy, I guess it’d be more polite to say “insanely brave”. Anyhow, I think there are very few people who won’t be astonished at these pictures and stories.

I’m not at all sure what A. Inventions, by Jonathan Hoefler, is, actually. The link that I followed said that GenAI image generators were involved. I don’t care.

Alignment Calendars 1584–1811, from Jonathan Hoefler’s Inventions.

Let’s end with music. Here are two absolutely exquisite song performances, courtesy of YouTube. First, Old Enough by the Raconteurs, Ricky Skaggs, and Ashley Monroe. The interplay of voices and strings is magical. Then Billy Strings with his band and string players, doing Gild the Lily. Just a lovely performance of a fine new song.

That’s all, folks, see you next time.

Kendzior Case-Study 13 Nov 2025, 8:00 pm

There was recently a flurry of attention and dismay over Sarah Kendzior having been suspended from Bluesky by its moderation system. Since the state of the art in trust and safety is evolving fast, this is worth a closer look. In particular, Mastodon has a really different approach, so let’s see how the Kendzior drama would have played out there.

Disclosures

I’m a fan of Ms Kendzior; for example, check out her recent When I Loved New York: fine writing and incisive politics. I like the Bluesky experience and have warm feelings toward the team there, although my long-term social-media bet is on Mastodon.

Back story

Back in early October, the Wall Street Journal published It’s Finally Time to Give Johnny Cash His Due, an appreciation for Johnny’s music that I totally agreed with. In particular I liked its praise for American IV: The Man Comes Around which, recorded while he was more or less on his deathbed, is a masterpiece. It also said that, relative to other rockers, Johnny “can seem deeply uncool”.

Ms Kendzior, who is apparently also a Cash fan and furthermore thinks he’s cool, posted to Bluesky “I want to shoot the author of this article just to watch him die.” Which is pretty funny, because one of Johnny’s most famous lyrics, from Folsom Prison Blues, was “I shot a man in Reno just to watch him die.” (Just so you know: In 1968 Johnny performed the song at a benefit concert for the prisoners at Folsom, and on the live record (which is good), there is a burst of applause from the audience after the “shot a man” lyric. It was apparently added in postproduction.)

Subsequently, per the Bluesky Safety account: “The account owner of @sarahkendzior.bsky.social was suspended for 72 hours for expressing a desire to shoot the author of an article.”

There was an outburst of fury on Bluesky about the sudden vanishing of Ms Kendzior’s account, and the explanation quoted above didn’t seem to reduce the heat much. Since I know nothing about the mechanisms used by Bluesky Safety, I’m not going to dive any deeper into the Bluesky story.

On Mastodon

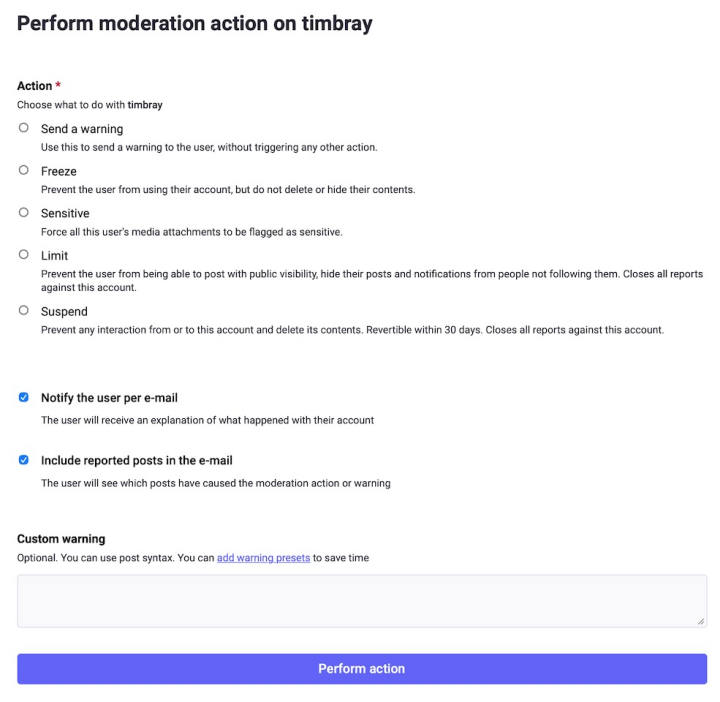

I do know quite a bit about Mastodon’s trust-and-safety mechanisms, having been a moderator on CoSocial.ca for a couple of years now. So I’m going to walk through how the same story might have unfolded on Mastodon, assuming Ms Kendzior had made the same post about the WSJ article. There are a bunch of forks in this story’s path, where it might have gone one way or another depending on the humans involved.

Reporting

The Mastodon process is very much human-driven. Anyone who saw Ms Kendzior’s post could pull up the per-post menu and hit the “Report” button. I’ve put a sample of what that looks like on the right, assuming someone wanted to report yours truly.

By the way, there are many independent Mastodon clients; some of them have “Report” screens that are way cooler than this. I use Phanpy, which has a hilarious little animation of a rubber stamp that leaves a red-ink “Spam” or whatever on the post you’re reporting.

We’ll get into what happens with reports, but here’s the first fork in the road: Would the Kendzior post have been reported? I think there are three categories of people that are interesting. First, Kendzior fans who are hip to Johnny Cash, get the reference, snicker, and move on. Second, followers who think “ouch, that could be misinterpreted”; they might throw a comment onto the post or just maybe report it. Third, Reply Guys who’ll jump at any chance to take a vocal woman down; they’d gleefully report her en masse. There’s no way to predict what would have happened, but it wouldn’t be surprising if there were reports from both the second and third groups, or from either one, or from neither.

Moderating

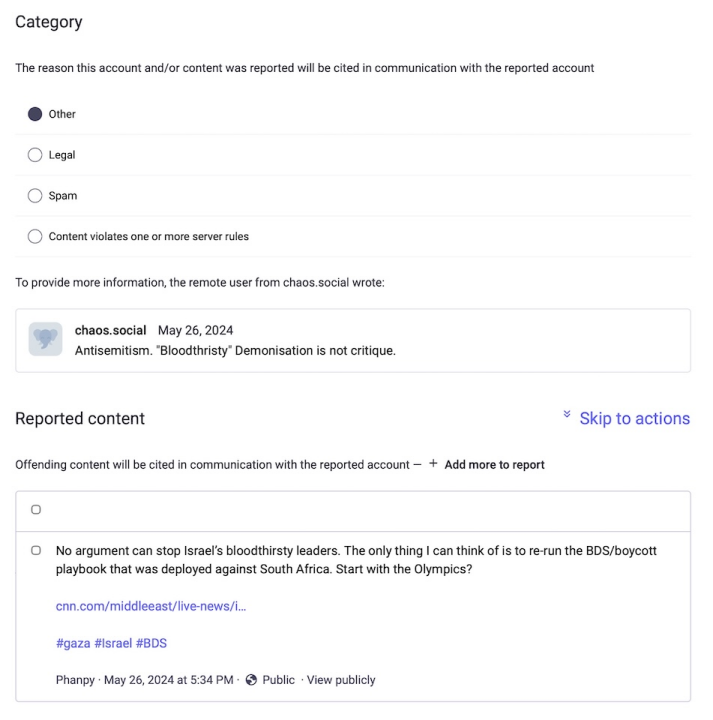

When you file a report, it goes both to the moderators of your instance and to those of the instance where it was posted (who oversee the poster’s account). I dug up a 2024 report someone filed against me to give a feeling for what the moderator experience is like.

I think it’s reasonably self-explanatory. Note that the account that filed the report is not identified, but that the server it came from is.

A lot of reports are just handled quickly by a single moderator and don’t take much thought: Bitcoin scammer or Bill Gates impersonator or someone with a swastika in their profile? Serious report, treated seriously.

Others require some work. In the moderation screen, just below the part on display above, there’s space for moderators to discuss what to do. (In this particular case they decided that criticism of political leadership wasn’t “antisemitism” and resolved the report with no action.)

In the Kendzior case, what might the moderators have done? The answer, as usual, is “it depends”. If there were just one or two reports and they leaned on terminology like “bitch” and “woke”, quite possibly they would have been dismissed.

If one or more reports were heartfelt expressions of revulsion or trauma at what seemed to be a hideous death threat, the moderators might well have decided to take action. Similarly if the reports were from people who’d got the reference and snickered but then decided that there really should have been a “just kidding” addendum.

Action

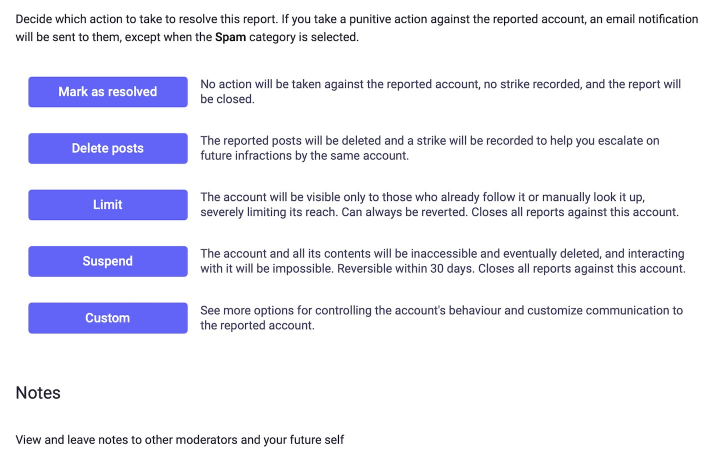

Here are the actions a moderator can take.

If you select “Custom”, you get this:

Once again, I think these are self-explanatory. Before taking up the question of what might happen in the Kendzior case, I should grant that moderators are just people, and sometimes they’re the wrong people. There have been servers with a reputation for draconian moderation on posts that are even moderately controversial. They typically haven’t done very well in terms of attracting and retaining members.

OK, what might happen in the Kendzior case? I’m pretty sure there are servers out there where the post would just have been deleted. But my bet is on that “Send a warning” option. Where the warning might go something like “That post of yours really shook up some people who didn’t get the Folsom Prison Blues reference and you should really update it somehow to make it clear you’re not serious.”

Typically, people who get that kind of moderation message take it seriously. If not, the moderator can just delete the post. And if the person makes it clear they’re not going to co-operate, letting them go on shaking people up creates a serious risk that your server gets mass-defederated, which is the death penalty. So (after some discussion) the moderators would delete the account. Everyone has the right to free speech, but nobody has a right to an audience courtesy of our server.

Bottom line

It is very, very unlikely that in the Mastodon universe, Sarah Kendzior’s account would suddenly have globally vanished. It is quite likely that the shot-a-man post would have been edited appropriately, and possible that it would have just vanished.

Will it scale?

I think the possible outcomes I suggested above are, well, OK. I think the process I’ve described is also OK. The question arises as to whether this will hold together as the Fediverse grows by orders of magnitude.

I think so? People are working hard on moderation tools. I think this could be an area where AI would help, by highlighting possible problems for moderators in the same way that it highlights spots-to-look-at today for radiologists. We’ll see.

There are also a couple of background realities that we should be paying more attention to. First, bad actors tend to cluster on bad servers, simply because non-bad servers take moderation seriously. The defederation scalpel needs to be kept sharp and kept nearby.

Secondly, I’m pretty convinced that the current open-enrollment policy adopted by many servers, where anyone can have an account just by asking for it, will eventually have to be phased out. Even a tiny barrier to entry — a few words on why you want to join or, even better, a small payment — is going to reduce the frequency of troublemakers to an amazing degree.

Take-aways

Well, now you know how moderation works in the Fediverse. You’ll have to make up your own mind about whether you like it.

Time to Migrate 3 Nov 2025, 8:00 pm

Dear World: Now is a good time to get off social media that’s going downhill. Where by “downhill” I mean any combination of “less useful”, “less safe”, or “less fun”. This month marks the third anniversary of my Mastodon migration and I’m convinced that right now, in late 2025, it’s the best place to go. Come join me. Here’s why.

Defining terms

In this post, by “Social media” I mean “what Twitter used to be, back when it was good”. We should expect our social-media future to be at least as useful, safe, and fun as that baseline. (But we can do better!)

By “Mastodon” I mean the many servers, mostly running the Mastodon software, that communicate using the ActivityPub protocol. Now I’ll try to convince you to start using one of them.

Start at joinmastodon.org

The simplest argument

Have you noticed that social-media products, in the long term, can’t seem to manage to stay fun and safe and useful? I have. But there’s one huge exception, a tool that’s been serving billions of us for decades, and works about as well as it ever did. I’m talking about email.

Why does email stay reasonably healthy? Because nobody owns it. Anyone on any server can communicate with anyone else on any other and it Just Works. Nobody can buy it and make it a vehicle for their politics. Nobody can crank up the ad density or make things worse to improve their profit margin.

Mastodon’s like email that way. Plus it does all the Post and Repost and Quote and Follow and Reply and Like and Block stuff that you’re used to, and there are thousands of servers and anyone can run one and nobody can own the whole thing. It doesn’t have ads and it won’t. It’s dead easy to use and it’s fun and you should give it a try.

The rest of this essay goes into detail about why Mastodon is generally great and specifically better than the alternatives. But if that simple pitch sounded good, stop here, go get an account and climb on board.

Why now?

Two things motivated me to post this piece now. First, this month is my three-year anniversary of bailing out on Twitter in favor of Mastodon.

Second is the release of Mastodon 4.5, which I think closes the last few important-missing-feature gaps.

The software is improving rapidly, particularly in the last couple of releases. It’s got cool features you won’t find elsewhere, and there’s very little cool stuff from elsewhere that’s not here. There was a time when newly-arrived people had confusing or unfriendly experiences, or missed features that were important to them. It looks to me like those days are over.

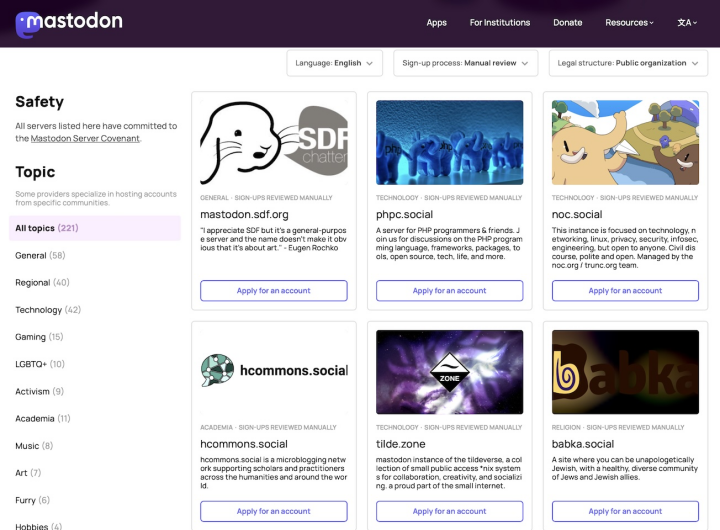

Migration

Mastodon is many thousands of servers, and you can join the biggest, mastodon.social, or shop around for another. But here’s the magic thing: If you end up disliking the server you’re on, or find a better one, you can migrate and take your followers with you! You can’t ever get locked in.

The server-selection menu has lots of options.

This is probably Mastodon’s most important feature. It’s why no billionaire can buy it and no corporation can enshittify it. As far as I know, Mastodon is the first widely-adopted social software ever to offer this.

Interaction

You hear it over and over: “I had <a big number> of followers on Twitter and now I have <a less-big number> on Mastodon, but I get so much more conversation and interaction when I post here.”

One of the people you’ll hear that from is me. My follower count is less than half the 45K I had on Twitter-that-was, but I get immensely more intelligent, friendly interaction than I ever got there. (And then sometimes I get told firmly that I’m wrong about this or that, but hey.) It’s the best social-media experience I’ve ever had.

Dunno about you, but conversation and interaction seem like a big deal to me. One reason things are lively is…

Sex

Here’s an axiom: An ad-supported service can’t have sex-positive or explicit content. Advertisers simply won’t tolerate having their message appear beside NSFW images or Gay-Leatherman tales or exuberant trans-positivity. Mastodon can.

Of course, you gotta be reasonable: posting anything actually illegal will get your ass perma-blocked and your account suspended. So will posting anything that’s NSFW etc. without a “Content Warning”. That’s a built-in feature of Mastodon which puts a little warning (“#NSFW” and “#Lewd” are popular) above your post, which is tastefully blurred out until whoever’s looking at it clicks on “Show content”. I use these all the time when I post about #baseball or #fútbol, because a lot of the geeks and greens who follow me are pointedly uninterested in sports.

(Oh, typing that in reminds me that you can subscribe to hashtags on Mastodon: Let’s see, I currently subscribe to, among others, #Vancouver, #Murderbot, and #Fujifilm.)

The “Ivory for Mastodon” app for Apple platforms, one of the many fine alternative clients.

Moderation and defederation

Did I just mention, two paragraphs up, getting blocked? Mastodon isn’t free of griefers, but the tools to fight them are good and getting better.

The good news is that each server moderates its own members. So there’s some variation of the standards from server to server, but less than you’d think. Since there are thousands of servers, there are thousands of moderators, which is a lot.